Usability

Plastic Fantastic Sunday, November 07, 2021

I'm sure it's clear from everything I write, but I am, as they say, an Old.

Part of that involves going through many technological transitions: computers; cars; TV; cable, satellite; cell phones; BBS systems; internet; touch-tone phones... I mean, it goes on and on.

Many of those are major advances; certainly, I wouldn't want to go back to the days before computing was ubiquitous. At least, I don't think I would. Probably.

Ch-ch-changes

Music has always been an important part of my life. The first thing I ever saved up to buy, in a serious way, was a decent stereo: a Sansui integrated amp, Thorens turntable, Nakamichi TX-2 tape deck and a pair of AVID 230 speakers. I think nearly all of two summers' wages went into this, and the rack to put it in. (Sadly, it was later all stolen out of my dorm room in college.)

Over the years, along with that equipment came a lot of music starting, of course, with LPs. (Embarrassingly, I think the first album I bought with my own earnings was the Jaws soundtrack. It's a great soundtrack, but geez...nerd.)

For the most part, over the years changes to "music storage" were motivated by either "convenience" (vinyl LPs weren't something you could play "portably"; CDs took up space; etc.) or "quality" (much less common, but CDs were an attempt to be better and more robust than vinyl; basically all other attempts at improving quality have failed in the broad market -- people don't seem to care).

But at each point, something was lost in the transition. Leaving cassettes aside, the transition from vinyl to CDs basically lost the album art and liner notes. The move to digital lost the physical media entirely, so even the size-reduced "cover" was gone, tactile experience lost. And streaming has been, well, awful for artists and completely eliminated the whole idea of "owning" an album...you almost don't even care whether you've "selected" something...with no cost, there's no need to engage, research, or even think about it.

Sure, streaming service. Hey, iTunes. Just put on something I'd like. Whatever.

The Wayside

But, in that ubiquity, we've left so much behind.

The whole experience of music stores; talking with a proprietor whose tastes help you find new things (hi, long-ago-closed Tom's Tracks in Providence!; hey there, long-ago-closed Town and Campus in Plymouth!); flipping through bins of beautiful covers; reading Robert Christgau's haiku-like descriptions of music in his guide; trying to figure out what the hell he was trying to say.

And then, selecting—sometimes on instinct—and bringing it home. Committing real money...and being invested in what's going to happen. The experience of opening an album; coaxing the disc out of the sleeve; placing it on a turntable, cleaning it, carefully lining up the stylus with the lead-in groove and...with the descent...a soft pop...and, somehow, music, taking you on a journey.

Comprehension

I look at a CD and I kind of understand the deal with how it functions. You take waveform in the analog domain, where we all live. You sample that at a rate that basically works. You record the numbers. You burn the bits to a disc. It basically makes sense. It's a scheme I could have thought up.

Mono music is kind of the same. Waveform makes wiggles. You trace the wiggles on a disc. You play back the wiggles. I get it.

Stereo, though, I just don't know. 45-degree cuts? A single groove that somehow produces two channels? I mean, the music is literally right there. It's entirely visible with your eyes, and yet so mysterious. It's not something I would have thought of. Analog is a kind of crazy, weird thing.

There's some sort of magic going on.

Experience

Sure, there are limitations, as there are with everything. As "receivers" of the information we're profoundly limited.

We're also limited in attention, which has, at least in my case, gotten worse over the years. Albums, though, are something you can't really ignore, because in 17 minutes or so they demand your attention as you need to lift, flip, clean and start side 2.

It's an experience that has to be planned by the musicians. Sequencing. Time between tracks. The side break. The inner sleeve, gatefold, cover, type, art...all of it working together, encouraging attention, focus.

Focus

And I think that's part of it. Vinyl encourages—requires—attention. You have to be an active listener.

It can clearly sound just as good (or bad) as any other method of music storage and reproduction. I'm not going to make claims of some sort of sonic miracle that occurs when stylus hits groove. But I am going to say that everything, as a whole, is just so much more enjoyable.

Your own focus makes you hear things. And the trip the musicians planned for you takes you somewhere. They tell you a story. You just have to listen.

You have to focus.

It Came from the Basement

So, up from the basement came my old records. A turntable from years ago, restored and plugged in.

With the spinning, the vibration, the concentration, a broad smile. It's really good to have this music ritual back.

And amazing to discover what you can hear when you give yourself the time and space to focus.

I Like Batteries! Friday, August 07, 2020

I've probably mentioned on the blog before that I'm a big fan of electric cars.

Starting in 2013 with a first generation Tesla Model S P85, both Z and I have been powered by electrons, with two leased BMW i3s and we've now replaced the Tesla with a Porsche Taycan.

I was a guest on the I Like Batteries podcast, and like a little yapping dog who just won't shut up, I overstayed my welcome, so it was split into two parts. Part one went live today, and part two drops next week.

Enjoy! (That should probably end in a question mark, or be surrounded by scare quotes.)

https://pod.co/ilikebatteries/030-dave-nanian-likes-battery-powered-cars-part-1-of-2

Hoops, Jumped Through Tuesday, November 12, 2019

I don't talk about it much on the blog, but I get an enormous amount of support email. The quantity can be overwhelming at times, and without some automation, it would be nearly impossible for me to do it alone.

Which I do. Every support reply, in all the years we've been here, has been written by me. (Yes, even the Dog's auto response. Sorry, he can't really type. But wouldn't it be sweet if he could?)

No Downtime

The basic problem with being a small "indie" shop is quite simple: you get no time off. I've literally worked every single day since starting Shirt Pocket, without fail, to ensure users get the help they request in their time of need. It's just part of the deal.

But, every so often you need a break, and to try to enforce the "less work" idea there, I try to bring something other than a Mac...since that means I can't do development, but can respond to users as needed.

Automation: It's Not Just for Print Bureaus

Many of the support requests are sent through the "Send to Shirt Pocket" button in the log window, especially when people want help determining what part of their hardware is failing. That submission includes a ZIP file of the settings involved in the backup, which contains the log, some supplementary diagnostic information, and any SuperDuper! crash logs that might have occurred.

One of the first things I did to automate my workflow, beyond some generally useful boilerplate, was to use Noodlesoft's Hazel to detect when I download the support ZIP from our tracking system.

When Hazel sees that happen, it automatically unzips the package, navigates through its content, pulls the most recent log and diagnostic information, and presents them to me so I can review them.

It's a pretty useful combination of Hazel's automation and a basic shell script, and I've used this setup for years. It's saved countless hours of tedium...something all automation should do.
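For the curious, the "unzip, dig through the contents, pull the most recent log" step can be sketched in a few lines of Python. This is only an illustration, not the actual Hazel rule or shell script: the function name, the ".log" suffix and the idea of picking the newest entry by the timestamp stored in the ZIP are all assumptions.

```python
import zipfile
from pathlib import Path
from typing import Optional


def latest_log_in_package(zip_path: Path, dest_dir: Path,
                          suffix: str = ".log") -> Optional[Path]:
    """Extract and return the newest log from a support ZIP, or None if there is none.

    Assumption: logs are identified by a ".log" suffix, and "most recent"
    means the latest modification time recorded inside the archive.
    """
    with zipfile.ZipFile(zip_path) as archive:
        logs = [info for info in archive.infolist()
                if not info.is_dir() and info.filename.endswith(suffix)]
        if not logs:
            return None
        # ZipInfo.date_time is a (year, month, day, hour, minute, second)
        # tuple, so tuples compare in chronological order.
        newest = max(logs, key=lambda info: info.date_time)
        return Path(archive.extract(newest, dest_dir))
```

A folder watcher (Hazel, in my case) would call something like this whenever a new support ZIP lands in Downloads, then open the returned file for review.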

Seriously, if you have a repetitive task, take the time to automate it: you'll be happy you did.

Two Years Ago

So, a couple of winters ago, in order to fulfill the "try not to work a lot on vacation" pledge, I took a cellular connected iPad Pro along as my "travel computer". While it was plenty fast enough to do what I needed to do, the process of dealing with these support events was convoluted, at best.

I had to use a combination of applications to achieve my goal, and when that became tiresome (so much dragging and tapping and clicking), I couldn't figure out how to automate it with Workflow.

Now, I'm not inexperienced with this stuff: I've been writing software since something like 1975. But no matter what I tried, Workflow just couldn't accomplish what I wanted to do. Which made the iPad Pro impractical as my travel computer: I just couldn't work efficiently on it.

(I know a lot of people can accomplish a lot on an iPad. But, this was just not possible.)

One Year Ago

So, the next year, I decided to purchase a Surface Go with LTE. It's not a fast computer, but it's small and capable, and cheap: much cheaper than the iPad Pro was.

And, by using the Windows Subsystem for Linux, in combination with PowerShell, I was able to easily automate the same thing I was doing with Hazel on macOS.

I was rather surprised how quickly it came together, with execution flow passing trivially from Windows-native to Unix-native and back to Windows-native.

This made traveling with the Surface Go quite nice! Not only does the Surface Go have a good keyboard, I had no significant issues during the two vacations I took with that setup, plus it was small and light.

This Year

But I'm not always out-and-about with a laptop, and sometimes support requests come in when I've just got a phone.

With iOS, I was back to the same issues that iPadOS had: there was no good way to automate the workflow. Even with iOS/iPadOS 13, it could not be done.

In fact, iOS 13 made things worse: even the rudimentary process I'd used up until iOS 12 was made even more convoluted, with multiple steps going from a Download from the web page into Files, and then into Documents, and then unzipping, and then drilling down, and then scrolling, opening, etc.

On an iPhone, it's even worse.

Greenish Grass

Frustrated by this, a few weeks ago I purchased a Pixel 4, to see how things had progressed on the Android front.

I hadn't used an Android phone since the Galaxy S9, and Google continues to move the platform forward.

As I said in an "epic" review thread:

iOS and Android applications are kind of converging on a similar design and operational language. There are differences, but in general, it's pretty easy to switch back and forth, save for things that are intentionally hard (yes, Apple, you've built very tall walls around this lovely garden).

And while Android's security has, in general, improved, they haven't removed the ability to do some pretty cool things.

And one of those cool things was to actually bring up my automatic support workflow.

Mischief, Managed

Now, given you can get a small Linux terminal for Android, I probably could have done it the same way as with Windows, with a "monitoring" process that then called a shell script that did the other stuff just like before.

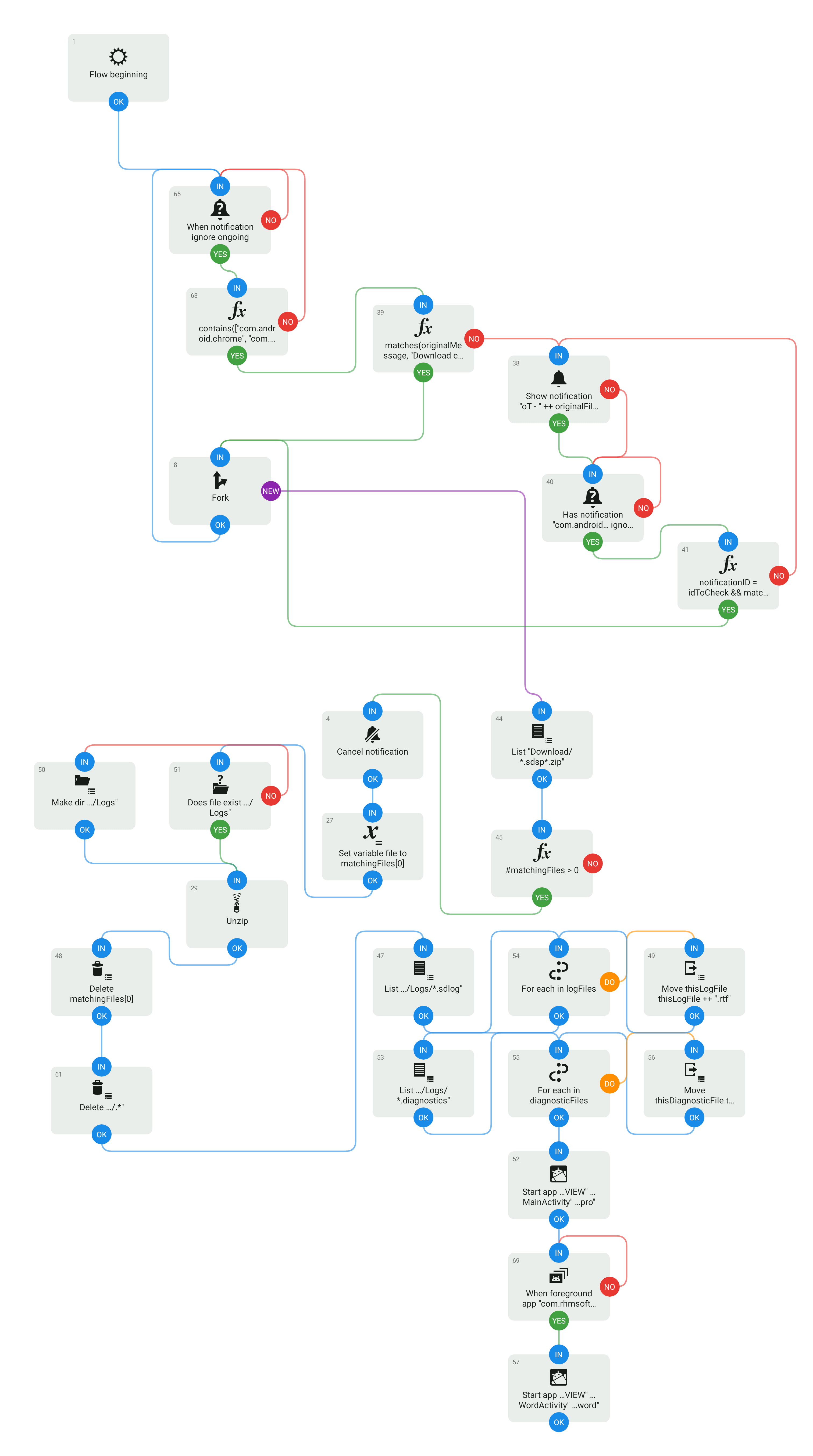

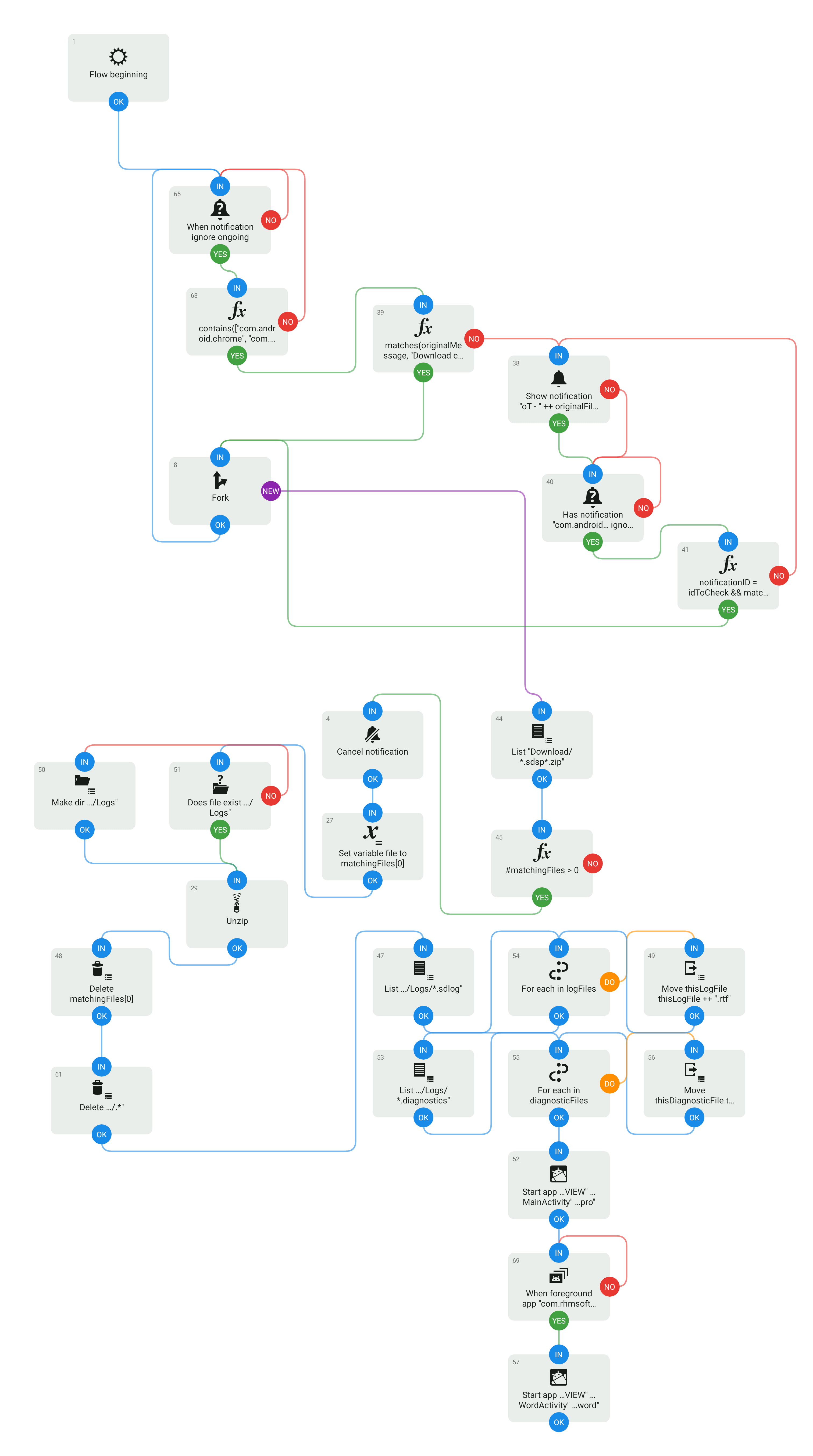

But, instead, I decided to try using Automate, a neat little semi-visual automation environment, to do it. And within about two hours, including the time needed to learn Automate, it was up and running.

I'm not saying the result isn't nerdy, but it was doable! And that made it entirely practical to respond to people when I'm using a phone, even when they send in a more complex case.

Will that be enough to encourage me to stay on Android? I don't know. But, combined with the other iOS 13 annoyances (apps that get killed when they shouldn't, constant location prompts even after you've said "Allow Always", general instability...so many things), it's been a comparatively pleasant experience...Android has come a long way, even in the last two years.

It's really nice to have alternatives. Maybe I'll just travel with a phone this year!

…But Sometimes You Need UI Monday, November 12, 2018

As much as I want to keep SuperDuper!'s UI as spare as possible, there are times when there's no way around adding things.

For years, I've been trying to come up with a clever, transparent way of dealing with missing drives. There's obvious tension between the reasons this can happen:

- A user is rotating drives, and wants two schedules, one for each drive

- When away from the desktop, the user may not have the backup drive available

- A drive is actually unavailable due to failure

- A drive is unavailable because it wasn't plugged in

While the first two cases are "I meant to do that", the latter two are not. But unfortunately, there's insufficient context to be able to determine which case we're in.

Getting to "Yes"

That didn't stop me from trying to derive the context:

I could look at all the schedules that are there, see whether they were trying to run the same backup to different destinations, and look at when they'd last run... and if the last time was longer than a certain amount, I could prompt. (This is the Time Machine method, basically.)

But that still assumes that the user is OK with a silent failure for that period of time...which would leave their data vulnerable with no notification.

I could try to figure out, from context (network name, IP address), what the typical "at rest" configuration is, and ignore the error when the context wasn't typical.

But that makes a lot of assumptions about a "normal" context, and again leaves user data vulnerable. Plus, a laptop moves around a lot: is working on the couch different than at your desk, and how would you really know, in the same domicile? What about taking notes during a meeting?

- How do you know the difference between this and being away from the connection?

- Ditto.

So after spinning my wheels over and over again, the end result was: it's probably not possible. But the user knows what they want. You just have to let them tell you.

And so:

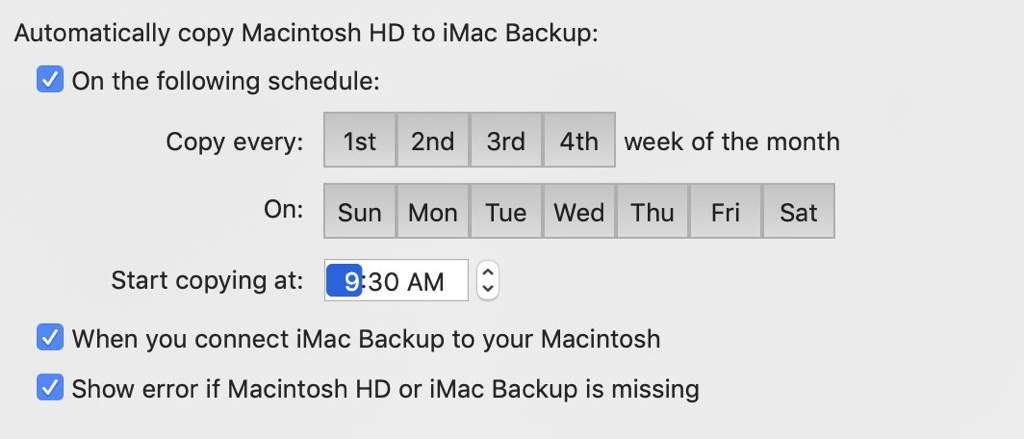

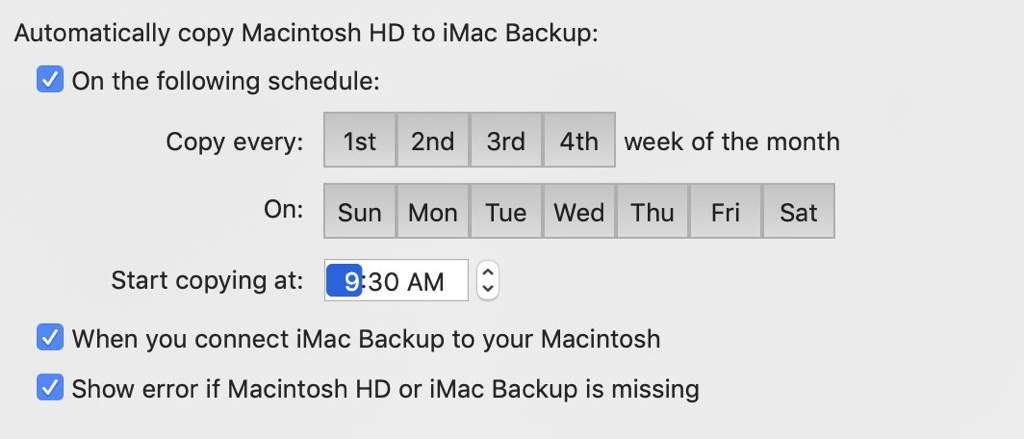

The schedule sheet now lets you indicate you're OK with a drive being missing, and that we shouldn't give an error.

In that case, SuperDuper won't even launch: there'll just be blissful silence.

The default is checked, and you shouldn't uncheck it unless you're sure that's what you want...but now, at least, you can tell SuperDuper what you want, and it'll do it.

And who knows? Maybe in the future we'll also warn you that it's been a while.

Maybe.

He's Dead, Jim

If you're not interested in technical deep-dives, or the details of how SuperDuper does what it does, you can skip to the next section...although you might find this interesting anyway!

In Practices Make Perfect (Backups), I discuss how simpler, more direct backups are inherently more reliable than using images or networks.

That goes for applications as well. The more complex they get, the more chances there are for things to go wrong.

But, as above, sometimes you have no choice. In the case of scheduling, things are broken down into little programs (LaunchAgents) that implement the various parts of the scheduling operation:

- sdbackupbytime manages time-based schedules

- sdbackuponmount handles backups that occur when volumes are connected

Each of those uses sdautomatedcopycontroller to manage the copy, and that, in turn, talks to SuperDuper to perform the copy itself.

As discussed previously, sdautomatedcopycontroller talks to SuperDuper via AppleEvents, using a documented API: the same one available to you. (You can even use sdautomatedcopycontroller yourself: see this blog post.)

Frustratingly, we've been seeing occasional errors during automated copies. These were previously timeout errors (-1712), but after working around those, we started seeing occasional "bad descriptor" errors (-1701), which would result in a skipped scheduled copy.

I've spent most of the last few weeks figuring out what's going on here, running pretty extensive stress tests (with >1600 schedules all fighting to run at once; a crazy case no one would ever run) to try to replicate the issue and—with luck—fix it.

Since sdautomatedcopycontroller talks to SuperDuper, it needs to know when it's running. Otherwise, any attempts to talk to it will fail. Apple's Scripting Bridge facilitates that communication, and the scripted program has an isRunning property you can check to make sure no one quit the application you were talking to.

Well, the first thing I found is this: isRunning has a bug. It will sometimes say "the application isn't running" when it plainly is, especially when a number of external programs are talking to the same application. The Scripting Bridge isn't open source, so I don't know what the bug actually is (not that I especially want to debug Apple's code), but I do know it has one: it looks like it's got a very short timeout on certain events it must be sending.

When that short timeout (way under the 2-minute default event timeout) happens, we would get the -1712 and -1701 errors...and we'd skip the copy, because we were told the application had quit.

To work around that, I'm no longer using isRunning to determine whether SuperDuper is still running. Instead, I observe terminated in NSRunningApplication...which, frankly, is what I thought Scripting Bridge was doing.

That didn't entirely eliminate the problems, but it helped. In addition, I trap the two errors, check to see what they're actually doing, and (when possible) return values that basically have the call run again, which works around additional problems in the Scripting Bridge. With those changes, and some optimizations on the application side (pro tip: even if you already know the pid, creating an NSRunningApplication is quite slow), the problem looks to be completely resolved, even in the case where thousands of instances of sdautomatedcopycontroller are waiting to do their thing while other copies are executing.
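The "trap the two errors and have the call run again" idea is a pretty general pattern, and a rough sketch of it looks something like this. To be clear, this is Python rather than the real Swift code, and AppleEventError, call_with_retry, the attempt count and the "is it still running?" callback are all made up for illustration. Only the two error codes come from above.

```python
import time

# The two codes discussed above: event timeout and "bad descriptor".
RETRYABLE_ERRORS = {-1712, -1701}


class AppleEventError(Exception):
    """Hypothetical stand-in for an error returned by an inter-application call."""

    def __init__(self, code):
        super().__init__(f"event failed with code {code}")
        self.code = code


def call_with_retry(send, app_is_running, attempts=3, delay=0.0):
    """Run send(); retry on the known-spurious errors while the app is alive.

    app_is_running is a separate, trusted liveness check (assumption: it is
    backed by something other than the call that just failed).
    """
    for attempt in range(attempts):
        try:
            return send()
        except AppleEventError as err:
            out_of_tries = attempt == attempts - 1
            # Give up on unknown errors, if the app really did quit,
            # or once the retry budget is spent.
            if err.code not in RETRYABLE_ERRORS or not app_is_running() or out_of_tries:
                raise
            time.sleep(delay)
```

The important bit is that the decision to retry doesn't trust the failing mechanism: it asks an independent source whether the application is still there.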

Time's Up

Clever readers (Hi, Nathan!) recognized that there was a failure case in Smart Wake. Basically, if the machine fell asleep in the 59 seconds before the scheduled copy time, the copy wouldn't run, because the wake event was canceled.

This was a known-but-unlikely issue that I didn't have time to fix and test before release (we had to rush out version 3.2.3 due to the Summer Time bug). I had time to complete the implementation in this version, so it's fixed in 3.2.4.

And with that, it's your turn:

Download SuperDuper! 3.2.4

The Best UI is No UI Saturday, October 27, 2018

In v3.2.2 of SuperDuper!, I introduced the new Auto Wake feature. Its whole reason for being is to wake the computer when the time comes for a backup to run.

Simple enough. No UI beyond the regular scheduling interface. The whole thing is managed for you.

But What About...?

I expected some pushback but, well, almost none! A total of three users had an issue with the automatic wake, in similar situations.

Basically, these users kept their systems on but their screens off. Rather inelegantly, even when the system is already awake, a wake event always turns the screen on, which would either wake the user in one case, or not turn the screen off again in the other due to other system issues.

It's always something.

Of course, they wanted some sort of "don't wake" option...which I really didn't want to do. Every option, every choice, every button adds cognitive load to a UI, which inevitably confuses users, causes support questions, and degrades the overall experience.

Sometimes, of course, you need a choice: it can't be helped. But if there's any way to solve a problem—even a minor one—without any UI impact, it's almost always the better way to go.

Forget the "almost": it's always the better way to go. Just do the right thing and don't call attention to it.

That's the "magic" part of a program that just feels right.

Proving a Negative

You can't tell a wake event to not wake if the system is already awake, because that's not how wake events work: they're either set or they're not set, and have no conditional element.

And, as I mentioned above, they're not smart enough to know the system is already awake.

Apple, of course, has its own "dark wake" feature, used by Power Nap. Dark wake, as is suggested by the name, wakes a system without turning on the screen. However, it's not available to 3rd party applications and has task-specific limitations that tease but do not deliver a solution here.

And you can't put up a black screen on wake, or adjust the brightness, because it's too late by the time the wake happens.

So there was no official way, available to 3rd parties, to make the wake not wake the screen.

In fact, the only way to wake is to not wake at all...but that would require an option. And there was no way I was adding an option. Not. Going. To. Happen.

Smart! Wake!

Somehow, I had to make it smarter. And so, after some working through the various possibilities... announcing... Smart Wake! (Because there's nothing like driving a word like "Smart" into the ground! At least I didn't InterCap.)

For those who are interested, here's how it works:

- Every minute, our regular "time" backup agent looks to see if it has something to do

- Once that's done, it gathers all the various potential schedules and figures out the one that will run next

- It cancels any previous wake event we've created, and sets up a wake event for any event more than one minute into the future

Of course, describing it seems simple: it always is, once you figure it out. Implementing it wasn't hard, because it's built on the work that was already done in 3.2.2 that manages a single wake for all the potential events. Basically, if we are running a minute before the backup is scheduled to run, we assume we're also going to be running a minute later, and cancel any pending wakes for that time. So, if we have an event for 3am, at 2:59am we cancel that wake if we're already running.

That ensures that a system that's already awake will not wake the screen, whereas a system that's sleeping will wake as expected.
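Reduced to its core, the once-a-minute decision can be sketched as a tiny function. This is a Python illustration under my own assumptions, not the agent's actual code: next_wake is a hypothetical name, and I'm reading "more than one minute into the future" as "strictly more than 60 seconds away".

```python
from datetime import datetime, timedelta


def next_wake(now, schedule_times, min_lead=timedelta(minutes=1)):
    """Return the time a wake event should be set for, or None for no wake.

    Called once a minute while the machine is awake. The caller is assumed
    to cancel any wake it set earlier and then set the one returned here.
    """
    # Anything a minute or less away is dropped: we're awake now, so we
    # assume we'll still be awake when it runs.
    upcoming = [t for t in schedule_times if t - now > min_lead]
    return min(upcoming, default=None)
```

So with a 3am schedule, a 2:00am check sets a wake for 3am, while the 2:59am check returns nothing and the pending wake gets canceled. If the machine is asleep at 2:59am, the check never runs, and the wake set earlier fires as planned.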

Fixes and Improvements

We've also fixed some other things:

- Due to a pretty egregious bug in Sierra's power manager (Radar 45209004 for any Apple Friends out there, although it's only 10.12 and I'm sure 10.12 is not getting fixed now), multiple alarms could be created with the wrong tag.

- Conversion to APFS on Fusion drives can create completely invalid "symlinks to nowhere". We now put a warning in the log and continue.

- Every so often, users were getting timeout errors (-1712) on schedules

- Due to a stupid error regarding Summer Time/Daylight Savings comparisons, sdbackupbytime was using 100% CPU the day before a time change

- General scheduling improvements

That's it. Enjoy!

Download SuperDuper! 3.2.3

Smarty Pants Monday, September 24, 2018

Executive Summary

SuperDuper 3.2 is now available. It includes

In the Less Smart Days of Old(e)

Since SuperDuper!'s first release, we've had Smart Update, which speeds up copying by quickly evaluating a drive on the fly, copying and deleting where appropriate. It does this in one pass for speed and efficiency. Works great.

However, there's a small downside to this approach: if your disk is relatively full, and a change is made that could temporarily fill the disk during processing, even though the final result would fit, we'd trigger a disk full error, and stop.

Recovery typically involved doing an Erase, then copy backup, which took time and was riskier than we'd like.

Safety First (and second)

There are some subtleties in the way Smart Update is done that can aggravate this situation -- but for a good cause.

While we don't "leave all the deletions to the end", as some have suggested (usually via a peeved support email), we consciously delete files as late as is practical: what we call "post-traversal". So, in a depth-first copy, we clean up as we "pop" back up the directory tree.

In human (as opposed to developer) terms, that means when we're about to leave a folder, we tidy it up, removing anything that shouldn't be there.

Why do we do it this way?

Well, when users make mistakes, we want to give them the best chance of recovery with a data salvaging tool. By copying before deleting at a given level, we don't overwrite them with new data as quickly. So, in an emergency, it's much easier for a data salvaging tool to get the files back.
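For the developers in the audience, here's roughly what "copy first, tidy up as we pop back up" looks like. It's a deliberately simplified Python sketch, not how SuperDuper! is implemented: mirror_folder and its log parameter are invented for the example, every file is copied unconditionally (a real Smart Update only copies what changed), and symlinks and file-versus-folder type changes are ignored.

```python
import shutil
from pathlib import Path


def mirror_folder(src: Path, dst: Path, log: list) -> None:
    """Mirror src into dst depth-first, deleting extras only when leaving a folder."""
    dst.mkdir(parents=True, exist_ok=True)
    wanted = set()

    # Copy phase: handle everything that should exist at this level,
    # descending into subfolders before moving on.
    for item in sorted(src.iterdir()):
        wanted.add(item.name)
        target = dst / item.name
        if item.is_dir():
            mirror_folder(item, target, log)
        else:
            shutil.copy2(item, target)
            log.append(("copy", target))

    # Post-traversal phase: just before "popping" back up, remove whatever
    # is on the destination but no longer on the source.
    for extra in sorted(dst.iterdir()):
        if extra.name not in wanted:
            if extra.is_dir():
                shutil.rmtree(extra)
            else:
                extra.unlink()
            log.append(("delete", extra))
```

The log makes the ordering visible: within any folder, the copies always appear before the deletes, which is exactly what keeps deleted data on the disk for as long as practical.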

The downside, though, is a potential for disk full errors when there's not much free space on a drive.

Smart Delete

Enter Smart Delete!

This is something we've been thinking about and working on for a while. The problem has always been balancing safety with convenience. But we've finally come up with an idea (and implementation) that works really well.

Basically, if we hit a disk full error, we "peek" ahead and clean things up before Smart Update gets there, just enough so it can do what it needs to do. Once we have the space, Smart Delete stops and allows the regular Smart Update to do its thing.

Smart Update and Smart Delete work hand-in-hand to minimize disk full errors while maximizing speed and safety, with no significant speed penalty.
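The "just enough" part is the key, and a toy model shows the idea. This Python sketch is purely illustrative: I'm not describing the real implementation, and smart_delete, its parameters and the notion of a pre-computed list of pending deletions are all assumptions made for the example.

```python
def smart_delete(needed, free, pending_deletes):
    """Pick the deletions to perform early so that `needed` bytes will fit.

    pending_deletes is a list of (path, size) pairs, in the order the
    normal traversal would eventually reach them (an assumption).
    Returns (paths to delete now, free space afterwards).
    """
    deleted = []
    for path, size in pending_deletes:
        if free >= needed:
            break  # enough room: hand control back to Smart Update
        deleted.append(path)
        free += size
    return deleted, free
```

With 30 bytes free, 100 needed and pending deletions of 50, 40 and 10 bytes, only the first two are removed early. The third stays put until the regular post-traversal cleanup reaches it, preserving as much recoverable data as possible.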

Everyone Wins!

So there you go: another completely "invisible" feature that improves SuperDuper! in significant ways that you don't have to think about...or even notice. You'll just see (or, rather, not see) fewer failures in more "extreme" copies.

This is especially useful for photographers and others who typically deal with large data files, and who rename or move huge folders of content. Whereas before those might fill a drive, now the copy will succeed.

Mojave Managed

We're also supporting Mojave in 3.2 with one small caveat: for the moment, we've opted out of Dark Mode. We just didn't have enough time to finish our Dark Mode implementation, didn't like what we had, and rather than delay things, decided to keep it in the lab for more testing and refinement. It'll be in a future update.

More Surprises in Store

We've got more things planned for the future, of course, so thanks for using SuperDuper! -- we really appreciate each and every one of you.

Enjoy the new version, and let us know if you have any questions!

Download SuperDuper! 3.2

3.2 B3: The Revenge! Wednesday, September 12, 2018

(OK, yeah, I should have used "The Revenge" for B4. Stop being such a stickler.)

Announcing SuperDuper 3.2 B3: a cavalcade of unnoticeable changes!

The march towards Mojave continues, and with the SAE (September Apple Event) happening today, I figured we'd release a beta with a bunch of polish that you may or may not notice.

But First...Something Technical!

As I've mentioned in previous posts, we've rewritten our scheduling, moving away from AppleScript to Swift, to avoid the various security prompts that were added to Mojave when doing pretty basic things.

Initially, I followed the basic structure of what I'd done before, effectively implementing a fully functional "proof of concept" to make sure it was going to do what it needed to do, without any downside.

In this Beta, I've moved past the original logic, and have taken advantage of capabilities that weren't possible, or weren't efficient, in AppleScript.

For example: the previously mentioned com.shirtpocket.lastVolumeList.plist was a file that kept track of the list of volumes mounted on the system, generated by sdbackuponmount at login. When a new mount occurred, or when the /Volumes folder changed, launchd would run sdbackuponmount again. It'd get a list of current volumes, compare that to the list of previous volumes, run the appropriate schedules for any new volumes, update com.shirtpocket.lastVolumeList.plist and quit.

This all made sense in AppleScript: the only way to find out about new volumes was to poll, and polling is terrible, so we used launchd to do it intelligently, and kept state in a file. I kept the approach in the rewritten version at first.
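To make the old state-file approach concrete, here's a rough Python sketch of the compare-and-update step. It's an illustration, not the original AppleScript or its Swift port: new_volumes is a hypothetical name, the plist is assumed to hold a plain list of volume names, and treating every volume as "new" when no state file exists yet is my simplification.

```python
import plistlib
from pathlib import Path


def new_volumes(current, state_file: Path):
    """Return volumes mounted since the last run, and record the current list."""
    if state_file.exists():
        previous = set(plistlib.loads(state_file.read_bytes()))
    else:
        previous = set()
    added = sorted(set(current) - previous)
    # Persist the current list so the next launch has something to compare to.
    state_file.write_bytes(plistlib.dumps(sorted(current)))
    return added
```

Each launch is stateless on its own, which is why the file is needed: it's the only memory the agent has between runs.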

But...Why?

When I reworked things to properly handle ThrottleInterval, I initially took this same approach and kept checking for new volumes for 10 seconds, with a sleep in between. I wrote up the blog post to document ThrottleInterval for other developers, and posted it.

That was OK, and worked fine, but also bugged me. Polling is bad. Even slow polling is bad.

So, I spent a while reworking things to block, and use semaphores, and mount notifications to release the semaphore which checked the disk list, adding more stuff to deal with the complex control flow...

...and then, looking at what I had done, I realized I was being a complete and utter fool.

Not by trying to avoid polling. But by not doing this the "right way". The solution was staring me right in the face.

Block-head

Thing is, volume notifications are built into Workspace, and always have been. Those couldn't be used in AppleScript, but they're right there for use in Objective-C or Swift.

So all I had to do was subscribe to those notifications, block waiting for them to happen, and when one came in, react to it. No need to quit, since it's no longer polling at all. And no state file, because it's no longer needed: the notification itself says what volume was mounted.
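The shape of the new agent is easier to see in code. This is a Python analogy only: the real thing subscribes to Workspace notifications from Swift, whereas here a queue.Queue stands in for the notification source, and mount_listener, STOP and the schedules dictionary are invented for the example.

```python
import queue

STOP = object()  # sentinel used to end the loop in this sketch


def mount_listener(events: queue.Queue, schedules: dict, start_backup) -> None:
    """Block until a volume mounts, then run any schedules tied to it."""
    while True:
        volume = events.get()  # blocks until something arrives: no polling
        if volume is STOP:
            break
        # The event itself names the volume, so there's no state to keep.
        for settings in schedules.get(volume, []):
            start_backup(volume, settings)
```

Compare that with the state-file version: no list of previous volumes, no diffing, no relaunching. The process just waits and reacts.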

It's been said many times: if you're writing a lot of code to accomplish something simple, you're not using the Frameworks properly.

Indeed.

There really is nothing much more satisfying than taking code that's become overly complicated and deleting most of it. The new approach is simpler, cleaner, faster and more reliable. All good things.

Download

That change is in there, along with a bunch more. You probably won't notice any big differences, but they're there and they make things better.

Download SuperDuper! 3.2 B3

Warning: this is a technical post, put here in the hopes that it'll help someone else someday.

We've had a problem over the years that our Backup on Connect LaunchAgent produces a ton of logging after a drive is attached and a copy is running. The logging looks something like:

9/2/18 8:00:11.182 AM com.apple.xpc.launchd[1]: (sdbackuponmount) Service only ran for 0 seconds. Pushing respawn out by 60 seconds.

Back when we originally noticed the problem, over 5 years ago, we "fixed" it by adjusting ThrottleInterval to 0 (found experimentally at the time). It had no negative effects, but the problem came back later and I never could understand why...certainly, it didn't make sense based on the man page, which says:

ThrottleInterval <integer>

This key lets one override the default throttling policy imposed on jobs by launchd. The value is in seconds, and by default, jobs will not be spawned more than once every 10 seconds. The principle behind this is that jobs should linger around just in case they are needed again in the near future. This not only reduces the latency of responses, but it encourages developers to amortize the cost of program invocation.

So. That implies that the jobs won't be spawned more often than every n seconds. OK, not a problem! Our agent processes the mount changes quickly, launches the backups if needed and quits. That seemed sensible--get in, do your thing quickly, and get out. We didn't respawn the jobs, and processed all of the potential intervening mounts and unmounts that might happen in a 10-second "throttled" respawn.

It should have been fine... but wasn't.

The only thing I could come up with was that there must be a weird bug in WatchPaths where under some conditions, it would trigger on writes to child folders, even though it was documented not to. I couldn't figure out how to get around it, so we just put up with the logging.

But that wasn't the problem. The problem is what the man page isn't saying, but is implied in the last part: "jobs should linger around just in case they are needed again" is the key.

Basically, the job must run for at least as long as the ThrottleInterval is set to (default = 10 seconds). If it doesn't run for that long, it respawns the job, adjusted by a certain amount of time, even when the condition isn't triggered again.

So, in our case, we'd do our thing quickly and quit. But we didn't run for the minimum amount of time, and that caused the logging. launchd would then respawn us. We wouldn't have anything to do, so we'd quit quickly again, repeating the cycle.

Setting ThrottleInterval to 0 worked, when that was allowed, because we'd run for more than 0 seconds, so we wouldn't respawn. But when they started disallowing it ("you're not that important")...boom.

Once I figured out what the deal was, it was an easy enough fix. The new agent runs for the full, default, 10-second ThrottleInterval. Rather than quitting immediately after processing the mounts, it sleeps for a second and processes them again. It continues doing this until it's been running for 10 seconds, then quits.
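A minimal sketch of that loop, in Python for brevity. This isn't the agent's actual code: process_mounts stands in for whatever the real agent does on each pass, and the function name and parameters are mine.

```python
import time


def run_for_minimum(process_mounts, minimum_seconds=10.0, poll_seconds=1.0,
                    clock=time.monotonic, sleep=time.sleep):
    """Call process_mounts repeatedly until minimum_seconds have elapsed.

    process_mounts is a hypothetical callback; clock and sleep are
    injectable so the loop can be exercised without really waiting.
    Returns the number of passes made.
    """
    started = clock()
    passes = 0
    while True:
        process_mounts()
        passes += 1
        remaining = minimum_seconds - (clock() - started)
        if remaining <= 0:
            # We've now run at least as long as the ThrottleInterval,
            # so it's safe to quit.
            return passes
        # Sleep for the poll interval, but never past the deadline.
        sleep(min(poll_seconds, remaining))
```

Calling run_for_minimum(handle_mounts) with the defaults keeps the process alive for the full 10 seconds, checking roughly once a second, which is the behavior described above.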

With that change, the logging has stopped, and a long mystery has been solved.

This'll be in the next beta. Yay!

Technical Update! Thursday, September 06, 2018

SuperDuper! 3.2 B1 was well received. We literally had no bugs reported against it, which was pretty gratifying.

So, let's repeat that with SuperDuper! 3.2 B2! (There's a download link at the bottom of this post.)

Remember - SuperDuper! 3.2 runs with macOS 10.10 and later, and has improvements for every user, not just those using Mojave.

Here are some technical things that you might not immediately notice:

If you're running SuperDuper! under Mojave, you need to add it to Full Disk Access. SuperDuper! will prompt you and refuse to run until this permission has been granted.

Due to the nature of Full Disk Access, it has to be enabled before SuperDuper is launched--that's why we don't wait for you to add it and automatically proceed.

As I explained in the last post, we've completely rewritten our scheduling so it's no longer in AppleScript. We've split that into a number of parts, one of which can be used by you from AppleScript, Automator, shell script--whatever--to automatically perform a copy using saved SuperDuper settings.

In case you didn't realize it: copy settings, which include the source and destination drives, the copy script and all the options, plus the log from when it was run, can be saved using the File menu, and you can put them anywhere you'd like.

The command line tool that runs settings is called sdautomatedcopycontroller (so catchy!) and is in our bundle. For convenience, there's a symlink to it available in ~/Library/Application Support/SuperDuper!/Scheduled Copies, and we automatically update that symlink if you move SuperDuper.

The command takes one or more settings files as parameters (either as Unix paths or file:// URLs), and handles all the details needed to run SuperDuper! automatically. If there's a copy in progress, it waits until SuperDuper! is available. Any number of these can be active, so you could throw 20 of them in the background, supply 20 files on the command line: it's up to you. sdautomatedcopycontroller manages the details of interacting with SuperDuper for you.
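If you'd rather drive it from a script, something like the following Python sketch should be close. It assumes the symlink in the Scheduled Copies folder has the same name as the tool, and the settings file names in the usage note are made up; nothing here interprets the tool's exit status, since that isn't documented in this post.

```python
import subprocess
from pathlib import Path

# Assumption: the symlink in Scheduled Copies is named after the tool itself.
CONTROLLER = (Path.home() / "Library" / "Application Support" / "SuperDuper!"
              / "Scheduled Copies" / "sdautomatedcopycontroller")


def build_copy_command(settings_files, controller=CONTROLLER):
    """Return the argument list: the tool followed by each settings file.

    Each entry may be a Unix path or a file:// URL; both are passed through
    unchanged.
    """
    if not settings_files:
        raise ValueError("at least one settings file is required")
    return [str(controller)] + [str(f) for f in settings_files]


def run_saved_copies(settings_files):
    """Run the saved settings and return the tool's raw exit status."""
    return subprocess.run(build_copy_command(settings_files)).returncode
```

For example, run_saved_copies(["/Users/me/Backups/Nightly.sdsp"]) (a hypothetical file) would hand that one settings file to the tool and block until it returns.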

- We've also created a small Finder extension that lets you select one or more settings files and run them--select "Run SuperDuper! settings" in the Services menu. The location and name of this particular command may change in future betas. (FYI, it's a very simple Automator action and uses the aforementioned sdautomatedcopycontroller.)

We now automatically mount the source and destination volumes during automated copies. Previously, we only mounted the destination. The details are managed by sdautomatedcopycontroller, so the behavior will work for your own runs as well.

Any volumes that were automatically mounted are automatically scheduled for unmount at the end of a successful copy. The unmounts are performed when SuperDuper quits (unless the unmount is vetoed by other applications such as Spotlight or Antivirus).

- There is no #5.

sdautomatedcopycontroller also automatically unlocks source or destination volumes if you have the volume password in the keychain.

If you have a locked APFS volume and you've scheduled it (or have otherwise set up an automated copy), you'll get two security prompts the first time through. The first authorizes sdautomatedcopycontroller to access your keychain. The second allows it to access the password for the volume.

To allow things to run automatically, click "Always allow" for both prompts. As you'd expect, once you've authorized for the keychain, other locked volumes will only prompt to access the volume password.

We've added Notification Center support for scheduled copies. If Growl is not present and running, we fall back to Notification Center. Our existing, long-term Growl support remains intact.

If you have need of more complicated notifications, we still suggest using Growl, since, in addition to supporting "forwarding" to the notification center, it can also be configured to send email and other handy things.

Plus, supporting other developers is cool. Growl is in the App Store and still works great. We support 3rd party developers and think you should kick them some dough, too! All of us work hard to make your life better.

Minor issue, but macOS used to clean up "local temporary files" (which were deleted on logout) by moving the file to the Trash. We used a local temporary file for Backup on Connect, and would get occasional questions from users asking why they would find a file we were using for that feature in the trash.

Well, no more. The file has been sent to the land of wind and ghosts.

That'll do for now: enjoy!

Download SuperDuper! 3.2 B2

Executive summary: sure, it's the Friday before Labor Day weekend, but there's a beta of SuperDuper for Mojave at the bottom of this (interesting?) post!

It Gets Worse

Back when OS X Lion (10.7) was released, the big marketing push was that iOS features were coming "Back to the Mac", after the (pretty stellar) Snow Leopard update that focused on stability, but didn't add much in the way of features.

Mojave (10.14) also focuses on stability and security. But in some ways, it takes an iOS "sandbox" approach to the task, and that makes things worse, not only for "traditional" users who use the Mac as a Mac (as opposed to a faster iPad-with-a-keyboard), but for regular applications as well.

Not Just Automation

Many more advanced Mac users employ AppleScript or Automator to automate complicated or repetitive tasks. Behind the scenes, many applications use Apple Events--which underlie AppleScript--to ask other applications, or parts of the system, to perform tasks for which they are designed.

A Simple Example

A really simple example is Xcode. There's a command in Xcode's File menu to Show in Finder.

When you choose that command, Xcode sends an Apple Event that asks Finder to open the folder where the file is, and to select that file. Pretty basic, and that type of thing has been in Mac applications since well before OS X.

In Beta 8 of Mojave, that action is considered unsafe. When selected, the system alarmingly prompts that "“Xcode” would like to control the application “Finder”." and asks the user if they want to allow it.

Now, there's no real explanation as to why this is alarming, and in this case, the user did ask to show the file in Finder, so they're likely to Allow it, and once done, they won't be prompted when Xcode asks Finder to do things.
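To see the same kind of request from the outside, here's a small Python sketch that asks Finder to reveal a file by way of osascript. It's an approximation of what "Show in Finder" does, not Xcode's actual implementation, and the function names are mine. Run on Mojave, I'd expect the prompt to name whichever application launched the script rather than Xcode.

```python
import subprocess


def reveal_in_finder_command(path):
    """Build an osascript command that asks Finder to reveal a file."""
    # Escape backslashes and double quotes so the path survives being
    # embedded in an AppleScript string literal.
    escaped = path.replace("\\", "\\\\").replace('"', '\\"')
    script = f'tell application "Finder" to reveal POSIX file "{escaped}"'
    return ["osascript", "-e", script]


def reveal_in_finder(path):
    """Send the request to Finder (macOS only) and return the exit status."""
    return subprocess.run(reveal_in_finder_command(path)).returncode
```

That single "tell" is an Apple Event sent from one application to another, which is exactly the sort of thing Mojave now stops to ask about.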

A More Complex Example

Back in 2006, when we added scheduling to SuperDuper, we decided to do it in a way that was as user-extensible as possible. We designed and implemented an AppleScript interface, used that interface to run scheduled copies, and provided the schedule driver, "Copy Job", in source form, so users would have an example of how to script SuperDuper.

That's worked out well, but as of Mojave, the approach had to change because of these security prompts.

Wake Up, Time to Die

An AppleScript of any reasonable complexity needs to talk to many different parts of the system in order to do its thing: that is, after all, what it's designed for.

But those parts of the system aren't necessarily things a user would recognize.

For example, our schedule driver needs to talk to System Events, Finder and, of course, SuperDuper itself.

When a schedule starts, those prompts suddenly appear, referencing an invisible application called Copy Job. And while a user might recognize a prompt for SuperDuper, it's quite unlikely they'll know what System Events is, or why they should allow the action.

Worse, a typical schedule runs when the user isn't even present, and so the prompts go without response, and the events time out.

Worse still, a timeout (the system defaults to two minutes) doesn't re-prompt, but assumes the answer is "no".

And even worse yet, a negative response fundamentally breaks scheduling in a way users can't easily recover from. (In Beta 8, a command-line utility is the "solution", but asking the user to resort to an obscure Unix command in order to repair this is unreasonable.)

That's just one example. There are many others.

Reaching an Accommodation

Of course, this is not acceptable. We can't have everything break randomly (and confusingly) for users just because they've installed a new OS version with an ill-considered implementation detail.

Instead, we've worked around the problem.

Scheduling has been completely rewritten for the next version of SuperDuper. We're still using our scripting interface, but the schedule driver is now a command-line application that doesn't need to talk to other system services via AppleEvents to do the things it needs to do. It only needs to talk to SuperDuper, and since it's signed with the same developer certificate, it can do that without prompting. A link to the beta with this change, among others, is at the end of the post.

This does mean, unfortunately, that users who edited our schedule driver can't do that any more: our driver has to be signed, and thus can't be modified. (I'll have more on this in a future post.)

It's more than a bit ironic that an approach that avoids the prompting can do far more, silently, than the original ever could, but that's what happens when you use a 16-ton weight to hammer in a security nail.

When SuperDuper! is started, we've added a blocking prompt for Full Disk Access, which is required to copy your data in Mojave, and--if you're using Sleep or Shut Down--access to the aforementioned System Events, which is used to provide those features. Still ugly, but we've done what we can to minimize the prompts.

What a View

This should remind you of one thing: Windows Vista.

Back when Microsoft released Vista, they added a whole bunch of security prompts that proved to be one of the worst ideas Microsoft ever had. And it didn't work. It annoyed users so much, and caused such a huge backlash, that they backed off the approach and got smarter about their prompting in later releases.

Perhaps Apple's marketing team needs to talk to engineering?

Those who ignore history...

Download SuperDuper! 3.2 Public Beta 1