You'd think, given I've written everything here, I'd learn to pay attention to what I've said in the past.

Let me explain. In v3.2.4, we started getting occasional reports of scheduled copies running more than once. Which seemed kind of crazy, because, well, our little time-based backup agent—sdbackupbytime—only runs once a minute, and I'd reviewed the code so many times. There was no chance of a logic error: it was only going to run these puppies one time.

But it was, sometimes, regardless. And one of the things I had to change to complete Smart Wake was to loop to stay active when there was a backup coming in the next minute. But the code was definitely right. Could that have caused this to happen?

(Spoiler alert after many code reviews, hand simulations and debugger step-throughs: NO.)

So if that code was right, why the heck were some users getting occasional multiple runs? And why wasn't it happening to us here at Shirt Pocket HQ?

Of course, anyone who reported any of these problems received an intermediate build that fixed them all. We didn't rush out a new update because the side effect was more copies rather than fewer.

Secondary Effects

Well, one thing we did in 3.2.4 was change what SuperDuper does when it starts.

Previously, in order to work around problems with Clean My Mac (which, for some reason, incorrectly disables our scheduling when a cleanup pass is run, much to my frustration), we changed SuperDuper to reload our LaunchAgents when it starts, in order to self-repair.

This worked fine, except when sdbackupbytime was waiting a minute to run another backup, or when sdbackuponmount was processing multiple drives. In that case, it could potentially kill the process waiting to complete its operation. So, in v3.2.4, we check to see if the version is different, and only perform the reload if the agent was updated.

The known problem with this is that it wouldn't fix Clean My Mac's disabling until the next update. But it also had a secondary effect: these occasional multiple runs. But why?

Well, believe it or not, it's because of ThrottleInterval. Again.

Readers may recall that launchd's ThrottleInterval doesn't really control how often a job might launch, "throttling" it to only once every n seconds. It actually forces a task to relaunch if it doesn't run for at least n seconds.

It does this even if a task succeeds, exits normally, and is set up to run, say, every minute.

sdbackupbytime is a pretty efficient little program, and takes but a fraction of a second to do what it needs to do. When it's done, it exits normally. But if it took less than 10 seconds to run, the system (sometimes) starts it again...and since it's in the same minute, it would run the same schedule a second time.

The previous behavior of SuperDuper masked this behavior, because when it launched it killed the agent that had been re-launched: a secondary, unexpected effect. So the problem didn't show up until we stopped doing that.

And I didn't expect ThrottleInterval to apply to time-based agents, because you can set things to run down to every second, so why would it re-launch an agent that was going to have itself launched every minute? (It's not that I can't come up with reasons, it's just that those reasons are covered by other keys, like KeepAlive.)

Anyway, I changed sdbackupbytime to pointlessly sleep up to ThrottleInterval seconds if it was going to exit "too quickly", and the problem was solved...by doing something dumb.
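Here's a minimal sketch of that workaround, written in Python for illustration (the real agent isn't). It assumes launchd's default ThrottleInterval of 10 seconds, and `work` is a hypothetical stand-in for whatever the agent does each minute. The idea is simply to pad the process's lifetime so it never exits before the throttle interval has elapsed.

```python
import time

THROTTLE_INTERVAL = 10.0  # seconds; assumed to match launchd's default ThrottleInterval


def run_then_pad(work, throttle=THROTTLE_INTERVAL, clock=time.monotonic, sleep=time.sleep):
    """Run `work`, then sleep so the process lives at least `throttle` seconds.

    Exiting sooner than ThrottleInterval can get the job relaunched within the
    same minute, so we pad the runtime instead. Returns the seconds slept.
    """
    start = clock()
    work()  # hypothetical: check for and run any schedules due this minute
    elapsed = clock() - start
    remaining = max(0.0, throttle - elapsed)
    if remaining > 0:
        sleep(remaining)
    return remaining
```

A run that already took longer than the interval exits immediately; only "too quick" runs pay the (pointless, but effective) sleep.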

Hey, you do what you have to do, you know? (And sometimes what you have to do is pay attention to your own damn posts.)

Queue'd

Another big thing we did was rework our AppleScript "queue" management to improve it. Which we did.

But we also broke something.

Basically, the queue maintains a semaphore that only allows one client at a time through to run copies using AppleScript. And we remove that client from the queue when its process exits.

The other thing we do is postpone quitting when there are clients in the queue, to make sure SuperDuper doesn't quit out from under someone who's waiting to take control.

In 3.2.4, we started getting reports that the "Shutdown on successful completion" command wasn't working, because SuperDuper wasn't quitting.

Basically, the process sending the Quit command was queued as a task trying to control us, and it never quit...so we deferred the quit until the queue drained, which never happened.

We fixed this and a few similar cases (people using keyboard managers or other things).
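A toy model makes the deadlock easier to see. This Python sketch is not SuperDuper's code, and the class and method names are hypothetical: clients are identified by process ID, the head of the queue holds the "semaphore", and quitting is deferred while anyone else is waiting. The fix shown here (one way to do it, under those assumptions) is to stop counting the quitting process as a waiting client.

```python
from collections import deque


class ScriptingQueue:
    """Toy one-client-at-a-time queue for scripting clients (hypothetical names)."""

    def __init__(self):
        self.waiting = deque()  # process IDs, in arrival order
        self.quit_pending = False

    def enqueue(self, pid):
        if pid not in self.waiting:
            self.waiting.append(pid)

    def owner(self):
        """The client currently allowed to run copies, if any."""
        return self.waiting[0] if self.waiting else None

    def client_exited(self, pid):
        """Called when a client's process exits; returns True if we should quit now."""
        if pid in self.waiting:
            self.waiting.remove(pid)
        return self.quit_pending and not self.waiting

    def request_quit(self, requester_pid):
        """Returns True to quit immediately, False to defer until the queue drains."""
        # The process asking us to quit may itself have been queued as a client.
        # It isn't waiting to run a copy, so drop it; otherwise we'd wait for it
        # to exit while it waits for us to quit, and neither ever happens.
        if requester_pid in self.waiting:
            self.waiting.remove(requester_pid)
        if self.waiting:
            self.quit_pending = True
            return False
        return True
```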

Leaving Things Out

As you might know, APFS supports "sparse files", which are, basically, files where "unwritten" data takes up no space on a drive. So, you might have a file that was preallocated with a size of 200GB, but if you only wrote to the first 1MB, it would only take up 1MB on the drive.

These types of files aren't used a lot, but they are used by Docker and VirtualBox, and we noticed that Docker and VirtualBox files were taking much longer to copy than we were comfortable with.

Our sparse file handling tried to copy a sparse file in a way that ensured it took up a minimal amount of space. That meant we scanned every block we read for zeros, and didn't write the all-zero sections: we'd seek past them and write only the non-zero part of the block.

The problem with this is that it takes a lot of time to scan every block. On top of that, there were some unusual situations where the OS would do...weird things...with certain types of seek operations, especially at the end of a file.

So, in 3.2.5, we've changed this so that rather than write a file "optimally", maximizing the number of holes, we write it "accurately". That is, we exactly replicate the sparse structure of the source. This speeds things up tremendously. For example, with a typical sparse Docker image, the OS's low-level copyfile function takes 13 minutes to copy it with full fidelity, and rsync takes 3 minutes without full fidelity, whereas SuperDuper 3.2.5 takes 53 seconds and exactly replicates the source.
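One way to copy "accurately" is to ask the file system where the data is, using the `SEEK_DATA` and `SEEK_HOLE` options to `lseek`, and copy only those extents. The post doesn't say which calls SuperDuper uses, so treat this Python version as an illustration of the technique under that assumption. It also ignores metadata, which a real backup must preserve.

```python
import errno
import os


def data_extents(fd):
    """Yield (start, end) byte ranges that contain data, skipping holes.

    On file systems that don't track holes, the whole file comes back as one
    data extent, so the copy is still correct, just not sparse.
    """
    size = os.fstat(fd).st_size
    offset = 0
    while offset < size:
        try:
            start = os.lseek(fd, offset, os.SEEK_DATA)
        except OSError as e:
            if e.errno == errno.ENXIO:  # no data past offset: the rest is a hole
                return
            raise
        end = os.lseek(fd, start, os.SEEK_HOLE)  # EOF counts as a hole
        yield start, end
        offset = end


def copy_sparse(src_path, dst_path, chunk=1 << 20):
    """Copy src to dst, writing only the source's data extents so holes stay holes."""
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        sfd, dfd = src.fileno(), dst.fileno()
        for start, end in data_extents(sfd):
            pos = start
            while pos < end:
                buf = os.pread(sfd, min(chunk, end - pos), pos)
                if not buf:
                    break
                os.pwrite(dfd, buf, pos)  # same offset: holes line up with the source
                pos += len(buf)
        # Set the final length; an unwritten tail becomes a hole, not stored zeros.
        os.ftruncate(dfd, os.fstat(sfd).st_size)
```

Unlike the old zero-scanning approach, nothing here reads the holes at all, which is where the time savings come from.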

That's a win.

Don't Go Away Mad...

In Mojave 10.14.4 or so, we started getting reports of an error unmounting the snapshot, after which the copy would fail.

I researched the situation and found that, in this unusual case, we'd ask the OS to eject the volume, and it would say it wasn't able to. We'd ask again (six times), and get an error each time...because it was already unmounted.

So, it would report a failure to unmount something that it had, in fact, unmounted. (Winning!)

That's been worked around in this update. (Actual winning!)
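The general shape of such a workaround is to trust the volume's actual state over the error code. In this Python sketch, `unmount` and `is_mounted` are hypothetical stand-ins for the real macOS calls, and the six attempts mirror the retry count described above; it isn't the shipping code.

```python
import time


def unmount_with_retry(unmount, is_mounted, attempts=6, delay=1.0, sleep=time.sleep):
    """Try to unmount, retrying on failure, but check whether it actually worked.

    `unmount` raises OSError on failure; `is_mounted` reports whether the volume
    is still mounted. Both are hypothetical callables.
    """
    last_error = None
    for attempt in range(attempts):
        if not is_mounted():
            return True  # already gone, whatever the last error said
        try:
            unmount()
            return True
        except OSError as e:
            last_error = e
            if not is_mounted():
                return True  # "failed", but it actually unmounted
            if attempt < attempts - 1:
                sleep(delay)
    raise RuntimeError("volume is still mounted") from last_error
```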

Improving the Terrible

As you've seen, Apple's process for granting Full Disk Access is awful. There's almost no guidance in the window—it's like they made it intentionally terrible (I think they did, to discourage people from doing it).

We'd hoped that they'd improve it, and while some small improvements have been made, it hasn't been enough. So, thanks to some work done and generously shared by Media Atelier, we now provide instructions and a draggable application proxy, overlaid on the original window. It's much, much better now.

Thanks again and a tip of the pocket to Pierre Bernard of Houdah Software and Stefan Fuerst of Media Atelier!

eSellerate Shutdown

Our long-time e-commerce provider, eSellerate, is shutting down as of 6/30. So, we had to move to a new "store".

After a long investigation, we've decided to move to Paddle, which—in our opinion—provides the best user experience of all the ones we tried.

Our new purchase process allows payment through more methods, including Apple Pay, and is simpler than before. And we've implemented a special URL scheme so your registration details can now be entered into SuperDuper from the receipt with a single click, which is cool, convenient, and helps to ensure they're correct.

Accomplishing this required quite a bit of additional engineering, including moving to a new serial number system, since eSellerate's was going away. We investigated a number of the existing solutions, and really didn't want to have the gigantically long keys they generated. So we developed our own.

We hope you like the new purchase process: thanks to the folks at Rogue Amoeba, Red Sweater Software, Bare Bones Software and Stand Alone—not to mention Thru-Hiker—for advice, examples and testing.

Note that this means versions of SuperDuper! older than 3.2.5 will not work with the new serial numbers (old serial numbers still work with the new SuperDuper). If you have a new serial number and you need to use it with an old version, please contact support.

(Note that this also means netTunes and launchTunes are no longer available for purchase. They'll be missed, by me at least.)

Various and Sundry

I also spent some time improving Smart Delete in this version. It now looks for files that have shrunk as candidates for pre-moval, and if it still can't find enough space and we're writing to an APFS volume, it proactively thins any snapshots to free up space in the container.

All that means even fewer out-of-space errors. Hooray!
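The post doesn't spell out how Smart Delete ranks these files, so the following Python sketch is only a guess at the idea, with hypothetical names throughout: a file that's now smaller on the source than its existing copy on the backup can have that stale copy removed first, for a net gain of the size difference.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    path: str
    source_size: int       # size of the file on the source now
    destination_size: int  # size of the stale copy already on the backup


def shrunk_candidates(entries, bytes_needed):
    """Pick files that shrank on the source, biggest net savings first.

    Removing the larger stale copy before writing the new one nets
    (destination_size - source_size) bytes. Stops once `bytes_needed` is
    covered; returns (chosen paths, total net bytes freed).
    """
    shrunk = [e for e in entries if e.source_size < e.destination_size]
    shrunk.sort(key=lambda e: e.destination_size - e.source_size, reverse=True)
    chosen, freed = [], 0
    for e in shrunk:
        if freed >= bytes_needed:
            break
        chosen.append(e.path)
        freed += e.destination_size - e.source_size
    return chosen, freed
```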

We also significantly improved our animations (which got weird during 10.13) by moving our custom animation code to Core Animation (wrapping ourselves in 2007's warm embrace) and fixed our most longstanding-but-surprisingly-hard-to-fix "stupid" bug: you can now tab between the fields in the Register... window. So, if you had long odds on that, contact your bookie: it's going to pay off big.

With a Bow

So there you go - the update is now available in-app, and of course has been released on the Shirt Pocket site. Download away!

On March 7, 2019, I unfortunately lost my Dad (which is why support has been a bit slow recently). I thought I'd post my eulogy for him here, as delivered, should anyone care. He was a good man, and will be missed.

Good morning, everyone. I’m David Nanian, up here representing my Mom and my brothers, John and Paul. Thanks so much for coming.

All of us here knew my Dad and were, without question, better off for it.

He’d greet you, friend or soon-to-be-friend, with a smile and a twinkle in his eye because, well, that was the kind of person he was. He exuded warmth and kindness. It was obvious as soon as you saw him.

And so we’re here today to celebrate him. To celebrate his achievements, certainly, because he was a great doctor. But also to celebrate his … his goodness. He was, truly, a good man.

It took my brothers and me a while to realize this. Like most kids, we went through the typical phases as we matured, where Dad went from a benevolent, God-like presence when we were kids, to a capricious one when we were teens… but that was mostly about us, not him.

Dad worked hard. Mom was a constant, grounding presence at home, but Dad’s typical day started early, and he usually wasn’t home until 8.

Dad’s sunny optimism and caring nature helped to heal many patients, but it took a lot out of him, and when he did come home, he was bone tired. After eating he’d usually fall asleep in his chair in front of the TV—only to awaken if we tried to change the channel. My brothers and I even tried slowly ramping down the volume, switching the channel, and then ramping it back up…it would sometimes work, but when it didn’t he’d wake up with a start, hopping mad.

He’d work hard, and would cover other doctors’ shifts on holidays, so that he’d have larger blocks of time for vacation with the family. And when that time came, he was a sometimes exhausting whirlwind of energy, trying to cram eleven-something months of missed family time into a few focused weeks…something he’d be looking forward to with anticipation, while we were a bit more apprehensive.

Where would the new “shortcut” on the ride to Kennebunkport take us this time? Was Noonan’s Lobster Hut 3 minutes or 3 hours away?

It was always an adventure.

When I was in my teens, Dad gave me a job mounting cardiograms. I think all three of us did this work at one point or another. It gave us a chance, not just to earn a little money to fritter away on comics or whatever, but also to see Dad at work. There, we could see how admired he was by his colleagues, staff and patients, and I began to see him not just as the “Dad” presence he was during our childhood, but as a real person.

During this time (and even today: Mom recently had this happen in an elevator when Dad was in the hospital), people would constantly stop me in the hallways and tunnels of Rhode Island Hospital as I was doing an errand for him—typically, getting him a Snickers bar—and they’d tell me what a great person he was. How he’d helped take care of their parent, or had a terrific sense of humor, or how quick he was with a kind word or helpful comment.

Later, during my college years, my friends—after meeting my parents—would constantly tell me how awesome my Mom and Dad were. How normal. How much they wish their own parents were like mine.

Which was weird at the time, but, I mean, they were right. I have great parents. I had a great Dad.

So I wanted to tell three little stories about why that was, from when I was old enough to understand.

—

Dad’s enthusiasm and optimism were positive traits, but they occasionally got him into some trouble.

I’d recently graduated from College, and that winter our family went on a ski vacation to Val d’Isere.

Dad was absolutely dying to try Raclette—which, if you don’t know, is a dish popular in that region where a wheel of cheese is heated at the table and scraped onto plates that have potatoes, pickles, vegetables, meats. It’s delicious, but quite filling.

So, we went to a small, family restaurant, and they brought out the various parts of the dish—there were quite a few plates of the traditional items—along with a big half-wheel of cheese and its heating machine.

Now, normally, that 8 pound chunk would last the restaurant a long time. It seemed super clear to the rest of us, just from the size, that there was no way it was “our cheese”. But Dad was absolutely convinced we were supposed to finish the whole thing. To do otherwise was to insult our hosts.

And so, to the obvious horror of the owners watching from the kitchen, Dad—in an attempt to not be ungrateful, to not be the ugly American—tried to finish the cheese.

The rest of us tapped out, but more plates came as Dad—never one to give up—desperately tried to do the right thing.

In the end, much to his chagrin, and the owner’s obvious relief, he couldn’t. Dad apologized for not being able to finish (I think, this is where my brothers and I snarkily told him to tell the waitress “Je suis un gros homme”), and they replied with something along the lines of “That’s quite all right”—but Dad’s attempt to conquer the wheel with such gusto, for the right-yet-wrong reason, even though we could all see the effort was doomed, was human and funny and endearing.

—

He loved to sail. We had a small boat, a 22-foot Sea Sprite named Systolee, and we’d sail it for fun, but Dad also participated in the East Greenwich Yacht Club’s Sea Sprite racing series.

Season after season, we’d come in last, or second to last, but he had a great time doing it, holding the tiller while wearing his floppy hat, telling us—the crew—to do this or that with the sails.

I’d had some success one summer racing Sunfish, and the next year, Dad let me skipper the Systolee in the race series, with him and Mom as crew.

I didn’t make it easy. It was important to be aggressive, especially at the start of a race, and both Mom and Dad would follow my various orders nervously as we came within inches of other boats, trying to hit the line exactly as the starting gun went off.

But he let me do it. He watched me as, one day, I climbed the mast of the pitching boat in the middle of a race in a stormy bay to retrieve a lost halyard (admittedly a crazy thing to do), despite his fear of heights, since he knew abandoning the race would end up being my failure, not his.

And that season, we came in second overall. But more than the trophy and the opportunity, he gave me the gift of trusting me, and treating me as an equal, week after week. Of allowing me to be better than him at something he loved.

—

Finally, Dad had some health challenges later in life. At one point, he came down with some weird peripheral neuropathy that was incorrectly diagnosed as Lou Gehrig’s Disease.

Fortunately, more testing in Boston showed that it wasn’t ALS, but some sort of neuropathy, and while it didn’t take his life, it did take away much of his balance, and with that, it took away skiing.

Dad loved skiing—and missed a real career writing overly positive ski condition reports for snow-challenged Eastern ski areas—and from my earliest days skiing with him it was clear he wanted nothing more than to be the oldest skier on the slopes, teaching his grandkids to love it the way he did.

Possibly, he just wanted to be old enough to be able to ski for free. He did love a bargain.

Anyway.

He didn’t let his neuropathy hold him back—of course he didn’t—and started traveling with Mom all over the world, and they’d regale us with the stories of the places they’d been, the classes they took…the number of bridge hands they won (or lost). He especially loved the safari they went on in Tanzania, and brought back many great pictures of the landscape and wildlife they’d seen.

He loved learning new things, and had more time to read, to make rum raisin ice cream (the secret, he’d tell us, is to soak the raisins in the rum…overnight!), and to enjoy the Cape with Mom. He was able to relax and play with his grandkids, and it was great to see him entertain my friends’ kids as well.

When he got his cancer diagnosis, he took the train to Boston to meet with his doctors, learned about Uber and Lyft, and was just fiercely determined, independent and optimistic. To illustrate his attitude, he just had a cataract repaired and he had the other one scheduled to be fixed in a few weeks.

During this time, the doctors and staff at Mass General would tell us that he was their hero. Not, I think, for facing his disease with courage and determination, although he did do that. But because he was 88, 89, 90, and full of life, of humor, and of love.

And of course, we all saw that too. Because he was our hero.

—

The last time I was with Dad, just a few weeks ago, he was clearly feeling poorly, and while he kept a brave and cheerful facade he also, with a voice tinged with regret, wanted to make sure that I knew how proud he was of John, and Paul, and me. And how he felt badly that he never told us that enough…because he didn’t want to spoil us.

You know, books and movies through the centuries constantly depict sons and daughters desperate to get the slightest bit of approval from their dads.

For us, though, he took clear delight in what we all did. He looked with admiration and approval at John’s beautiful photography, Paul’s Peace Corps service and ultralight outdoor kit business built from his travel and experience hiking the Appalachian Trail, my crazy computer stuff.

And so I told him, as clearly as I could, that it was never in doubt.

Of course we knew.

Just as each and every one of you knows how much he cared for you. Whether you were part of his family, a patient, or a friend, he made it clear. He was truly happy to know you. You were truly loved.

And now he’s gone, and the world is a little bit darker because of it. But we all have, within us, a memory of him. A memory of his kindness, his boundless optimism, his love, his zest for life.

And with that in our hearts, we can look out, perhaps at the snow outside: dirty brown, with bare patches, rocks, ice…ice covered rocks. You know, if you’re an Eastern skier: it’s “machine loosened frozen granular”.

Imagine him there, with his arm around your shoulders, and a big smile on his face, and see it the way he’d make you see it.

See that the snow condition’s fantastic. It’s always fantastic. Life is terrific. Every day.

Remember that, greet the day with a mischievous smile and an open heart, and think of him.

…But Sometimes You Need UI
Monday, November 12, 2018

As much as I want to keep SuperDuper!'s UI as spare as possible, there are times when there's no way around adding things.

For years, I've been trying to come up with a clever, transparent way of dealing with missing drives. There's obvious tension between the reasons this can happen:

- A user is rotating drives, and wants two schedules, one for each drive

- When away from the desktop, the user may not have the backup drive available

- A drive is actually unavailable due to failure

- A drive is unavailable because it wasn't plugged in

While the first two cases are "I meant to do that", the latter two are not. But unfortunately, there's insufficient context to be able to determine which case we're in.

Getting to "Yes"

That didn't stop me from trying to derive the context:

- Rotating drives: I could look at all the schedules that are there, see whether they were trying to run the same backup to different destinations, and look at when they'd last run... and if the last time was longer than a certain amount, I could prompt. (This is the Time Machine method, basically.)

  But that still assumes that the user is OK with a silent failure for that period of time...which would leave their data vulnerable with no notification.

- Away from the desktop: I could try to figure out, from context (network name, IP address), what the typical "at rest" configuration is, and ignore the error when the context wasn't typical.

  But that makes a lot of assumptions about a "normal" context, and again leaves user data vulnerable. Plus, a laptop moves around a lot: is working on the couch different than at your desk, and how would you really know, in the same domicile? What about taking notes during a meeting?

- Drive failure: How do you know the difference between this and being away from the connection?

- Not plugged in: Ditto.

So after spinning my wheels over and over again, the end result was: it's probably not possible. But the user knows what they want. You just have to let them tell you.

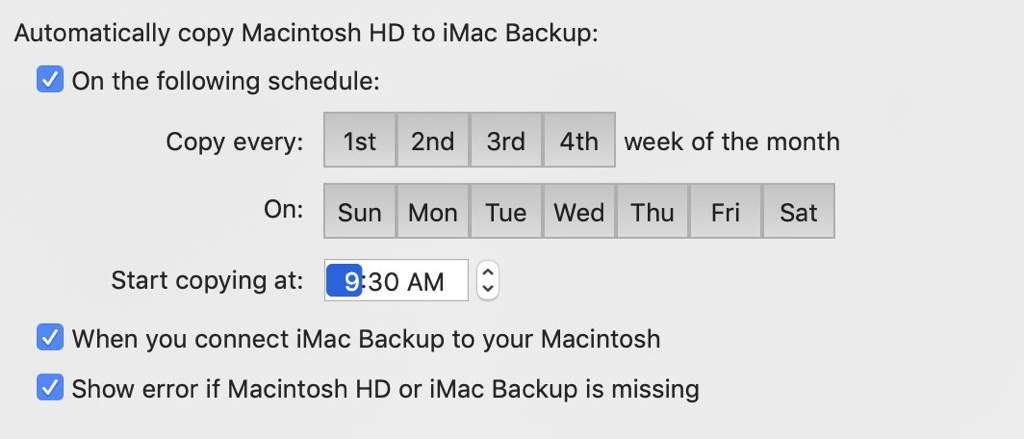

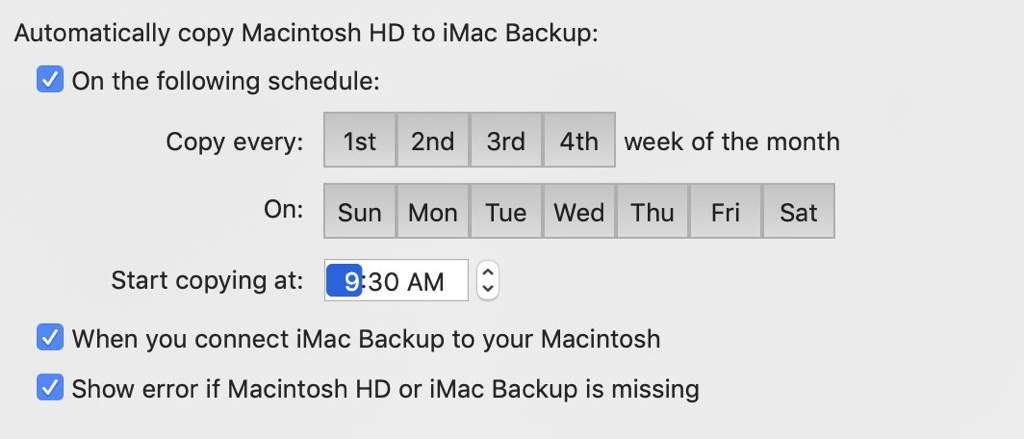

And so:

The schedule sheet now lets you indicate you're OK with a drive being missing, and that we shouldn't give an error.

In that case, SuperDuper won't even launch: there'll just be blissful silence.

The default is checked, and you shouldn't uncheck it unless you're sure that's what you want...but now, at least, you can tell SuperDuper what you want, and it'll do it.
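Once the user supplies the missing context, the decision itself is tiny. This Python sketch uses a hypothetical `error_if_missing` flag for the checkbox (the post doesn't give its exact label) and models the currently mounted volumes as a set of names.

```python
from dataclasses import dataclass


@dataclass
class Schedule:
    destination: str
    error_if_missing: bool = True  # hypothetical name for the checkbox; checked by default


def should_launch(schedule, mounted_volumes):
    """Decide what a scheduled run does when it fires.

    "copy"  : the destination is present, so run the backup.
    "error" : it's missing and the user wants to hear about it.
    "skip"  : it's missing, but the user said that's expected, so stay silent
              and don't launch SuperDuper at all.
    """
    if schedule.destination in mounted_volumes:
        return "copy"
    return "error" if schedule.error_if_missing else "skip"
```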

And who knows? Maybe in the future we'll also warn you that it's been a while.

Maybe.

He's Dead, Jim

If you're not interested in technical deep-dives, or the details of how SuperDuper does what it does, you can skip to the next section...although you might find this interesting anyway!

In Practices Make Perfect (Backups), I discuss how simpler, more direct backups are inherently more reliable than using images or networks.

That goes for applications as well. The more complex they get, the more chances there are for things to go wrong.

But, as above, sometimes you have no choice. In the case of scheduling, things are broken down into little programs (LaunchAgents) that implement the various parts of the scheduling operation:

- sdbackupbytime manages time-based schedules

- sdbackuponmount handles backups that occur when volumes are connected

Each of those uses sdautomatedcopycontroller to manage the copy, and that, in turn, talks to SuperDuper to perform the copy itself.

As discussed previously, sdautomatedcopycontroller talks to SuperDuper via AppleEvents, using a documented API: the same one available to you. (You can even use sdautomatedcopycontroller yourself: see this blog post.)

Frustratingly, we've been seeing occasional errors during automated copies. These were previously timeout errors (-1712), but after working around those, we started seeing the occasional "bad descriptor" errors (-1701) which would result in a skipped scheduled copy.

I've spent most of the last few weeks figuring out what's going on here, running pretty extensive stress tests (with >1600 schedules all fighting to run at once; a crazy case no one would ever run) to try to replicate the issue and—with luck—fix it.

Since sdautomatedcopycontroller talks to SuperDuper, it needs to know whether SuperDuper is running; otherwise, any attempt to talk to it will fail. Apple's Scripting Bridge facilitates that communication, and the scripted application object has an isRunning property you can check to make sure no one quit the application you were talking to.

Well, the first thing I found is this: isRunning has a bug. It will sometimes say "the application isn't running" when it plainly is, especially when a number of external programs are talking to the same application. The Scripting Bridge isn't open source, so I don't know what the bug actually is (not that I especially want to debug Apple's code), but I do know it has one: it looks like it's got a very short timeout on certain events it must be sending.

When that short timeout (way under the 2-minute default event timeout) happens, we would get the -1712 and -1701 errors...and we'd skip the copy, because we were told the application had quit.

To work around that, I'm no longer using isRunning to determine whether SuperDuper is still running. Instead, I observe the terminated property of NSRunningApplication...which, frankly, is what I thought Scripting Bridge was doing.

That didn't entirely eliminate the problems, but it helped. In addition, I trap the two errors, check to see what they're actually doing, and (when possible) return values that basically have the call run again, which works around additional problems in the Scripting Bridge. With those changes, and some optimizations on the application side (pro tip: even if you already know the pid, creating an NSRunningApplication is quite slow), the problem looks to be completely resolved, even in the case where thousands of instances of sdautomatedcopycontroller are waiting to do their thing while other copies are executing.
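The retry side of this can be sketched in Python. It isn't SuperDuper's code (which works through Scripting Bridge): `send` and `app_terminated` are hypothetical callables, and the attempt cap is an assumption the post doesn't mention. The key point is that liveness comes from a source other than the Apple event that just failed.

```python
ERR_AE_TIMEOUT = -1712         # errAETimeout
ERR_AE_DESC_NOT_FOUND = -1701  # errAEDescNotFound
RETRYABLE = {ERR_AE_TIMEOUT, ERR_AE_DESC_NOT_FOUND}


class AppleEventError(Exception):
    def __init__(self, code):
        super().__init__(f"Apple event error {code}")
        self.code = code


def call_with_retry(send, app_terminated, max_attempts=5):
    """Send an Apple event, retrying the two spurious errors while the app is alive.

    `send` performs the call and raises AppleEventError on failure.
    `app_terminated` is a liveness check that doesn't go through Apple events
    (on macOS, something like observing NSRunningApplication's `terminated`).
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return send()
        except AppleEventError as e:
            # Give up on real errors, on a genuinely quit app, or when out of tries.
            if e.code not in RETRYABLE or app_terminated() or attempt == max_attempts:
                raise
```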

Time's Up

Clever readers (Hi, Nathan!) recognized that there was a failure case in Smart Wake. Basically, if the machine fell asleep in the 59 seconds before the scheduled copy time, the copy wouldn't run, because the wake event was canceled.

This was a known-but-unlikely issue that I didn't have time to fix and test before release (we had to rush out version 3.2.3 due to the Summer Time bug). I had time to complete the implementation in this version, so it's fixed in 3.2.4.

And with that, it's your turn:

Download SuperDuper! 3.2.4

The Best UI is No UI
Saturday, October 27, 2018

In v3.2.2 of SuperDuper!, I introduced the new Auto Wake feature. Its whole reason for being is to wake the computer when the time comes for a backup to run.

Simple enough. No UI beyond the regular scheduling interface. The whole thing is managed for you.

But What About...?

I expected some pushback but, well, almost none! A total of three users had an issue with the automatic wake, in similar situations.

Basically, these users kept their systems on but their screens off. Rather inelegantly, even when the system is already awake, a wake event always turns the screen on. In one case that would wake the user; in the other, due to other system issues, the screen wouldn't turn off again.

It's always something.

Of course, they wanted some sort of "don't wake" option...which I really didn't want to do. Every option, every choice, every button adds cognitive load to a UI, which inevitably confuses users, causes support questions, and degrades the overall experience.

Sometimes, of course, you need a choice: it can't be helped. But if there's any way to solve a problem—even a minor one—without any UI impact, it's almost always the better way to go.

Forget the "almost": it's always the better way to go. Just do the right thing and don't call attention to it.

That's the "magic" part of a program that just feels right.

Proving a Negative

You can't tell a wake event to not wake if the system is already awake, because that's not how wake events work: they're either set or they're not set, and have no conditional element.

And, as I mentioned above, they're not smart enough to know the system is already awake.

Apple, of course, has its own "dark wake" feature, used by Power Nap. Dark wake, as the name suggests, wakes a system without turning on the screen. However, it's not available to third-party applications and has task-specific limitations that tease, but don't deliver, a solution here.

And you can't put up a black screen on wake, or adjust the brightness, because it's too late by the time the wake happens.

So there was no official way for a third-party application to wake the system without also waking the screen.

In fact, the only way to keep the screen from waking was to not wake at all...but that would require an option. And there was no way I was adding an option. Not. Going. To. Happen.

Smart! Wake!

Somehow, I had to make it smarter. And so, after working through the various possibilities... announcing... Smart Wake! (Because there's nothing like driving a word like "Smart" into the ground! At least I didn't InterCap.)

For those who are interested, here's how it works:

- Every minute, our regular "time" backup agent looks to see if it has something to do

- Once that's done, it gathers all the various potential schedules and figures out the one that will run next

- It cancels any previous wake event we've created, and sets up a wake event for any event more than one minute into the future

Of course, describing it seems simple: it always is, once you figure it out. Implementing it wasn't hard, because it's built on the work that was already done in 3.2.2 that manages a single wake for all the potential events. Basically, if we are running a minute before the backup is scheduled to run, we assume we're also going to be running a minute later, and cancel any pending wakes for that time. So, if we have an event for 3am, at 2:59am we cancel that wake if we're already running.

That ensures that a system that's already awake will not wake the screen, whereas a system that's sleeping will wake as expected.
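The core of those steps can be sketched in Python. It's a simplification under stated assumptions: each schedule's next run time is already computed, and the calls that actually create and cancel wake events are left out; the function just returns what to cancel and what to set.

```python
from datetime import datetime, timedelta


def plan_wake(now, next_run_times, current_wake):
    """Decide which wake event to cancel and which to set, Smart Wake-style.

    next_run_times: upcoming run times for every schedule.
    current_wake:   the wake event set on the previous pass, or None.
    Returns (cancel_current, new_wake). new_wake is None when the next run is
    a minute or less away: we're running, so the system is evidently awake,
    and a wake event would only turn the screen on.
    """
    upcoming = [t for t in next_run_times if t > now]
    next_run = min(upcoming, default=None)
    if next_run is not None and next_run - now > timedelta(minutes=1):
        new_wake = next_run
    else:
        new_wake = None
    # Always cancel the wake we created last time; new_wake replaces it.
    return current_wake is not None, new_wake


three_am = datetime(2018, 10, 27, 3, 0)
print(plan_wake(datetime(2018, 10, 27, 2, 59), [three_am], three_am))
```

At 2:59am with a 3am schedule, this cancels the 3am wake and sets none, matching the case described above; a sleeping Mac never reaches 2:59, so its wake stays in place.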

Fixes and Improvements

We've also fixed some other things:

- Due to a pretty egregious bug in Sierra's power manager (Radar 45209004 for any Apple Friends out there, although it's only 10.12 and I'm sure 10.12 is not getting fixed now), multiple alarms could be created with the wrong tag.

- Conversion to APFS on Fusion drives can create completely invalid "symlinks to nowhere". We now put a warning in the log and continue.

- Every so often, users were getting timeout errors (-1712) on schedules

- Due to a stupid error regarding Summer Time/Daylight Savings comparisons, sdbackupbytime was using 100% CPU the day before a time change

- General scheduling improvements

That's it. Enjoy!

Download SuperDuper! 3.2.3

Waking the Neighbors
Tuesday, October 09, 2018

I'm not going to say Disk Full errors never happen any more. But I am going to say that in the weeks since we released SuperDuper! 3.2, there's been a gigantic drop in their frequency. It's really awesome, both for users (who have fewer failed copies) and for me (since I have fewer support emails). And it's a big improvement in safety, too.

I've said this kind of thing before, but these are the kinds of improvements I love: a basically invisible change that results in a meaningful improvement in people's lives, even when they might not notice things are better.

We want to be invisible. We want things to just work.

You Snooze You Lose (Data)

And along those lines, I'm happy to announce SuperDuper! 3.2.2!

If you've ever read the User's Guide (don't feel bad, I know you haven't), you likely know that we've always had you set a "wake" event in the Energy Saver preference pane. Before SuperDuper 3.1.7, you'd set it for a minute before the copy; since then, due to changes in macOS 10.13.4, you'd set it for the same time as the copy.

I never liked the fact that you had to do this yourself. In fact, to put it bluntly, it kinda sucked, not only because you had to remember to do it, but because you could only have a single wake event set. That means that multiple copies on different schedules wouldn't necessarily work the way you'd want, because you couldn't guarantee things would be awake.

This actually mattered less back when the system would wake really often overnight, and your backups would run even if you forgot to set the wake event. But Apple's gotten better at keeping your Mac asleep...which also means you have a higher potential to miss backups scheduled when your Mac is sleeping.

That's bad. We don't want you to miss backups.

And now you won't. SuperDuper! 3.2.2's new Auto Wake feature manages this for you, waking your system before the scheduled copy. If you have an existing wake event set, you can turn it off: all of this is handled for you, regardless of how many backups you have scheduled, what times they're scheduled for, or how often they run. We'll only wake when we're really going to run a copy.
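To make the "regardless of the number of backups" part a little more concrete, here's a minimal Python sketch of one way a single wake time could be picked from several daily schedules: find each schedule's next run, take the earliest, and back off a small lead time. The one-minute lead, the daily-only schedules and the next_wake name are all assumptions for illustration, not how SuperDuper! actually does it, and the naive local times gloss over Daylight Saving Time changes.

```python
from datetime import datetime, timedelta
from typing import Iterable, Optional, Tuple


def next_wake(now: datetime, daily_times: Iterable[Tuple[int, int]],
              lead: timedelta = timedelta(minutes=1)) -> Optional[datetime]:
    """Pick one wake time covering every daily schedule (hypothetical helper)."""
    upcoming = []
    for hour, minute in daily_times:
        run = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
        if run <= now:
            run += timedelta(days=1)  # already passed today, so use tomorrow
        upcoming.append(run)
    if not upcoming:
        return None  # nothing scheduled: no reason to wake at all
    # Wake only for the copy that will really run next, a little early.
    return min(upcoming) - lead


print(next_wake(datetime(2018, 10, 9, 22, 30), [(23, 0), (2, 0)]))
# 2018-10-09 22:59:00
```

Once that copy has run, the same calculation simply yields the next wake time, so any number of schedules collapses into one pending wake.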

Combined with our Notification Center support, this means you'll know when a successful backup has taken place overnight.

Various Fixes and Improvements

We've made some improvements to Smart Delete in this release. There were a few extremely-full-disk situations where we'd actually run out of space when creating directories or symlinks (basically, items with no actual data "size"). We weren't engaging Smart Delete in those situations, and now we do.

There was also an overly aggressive error check that was rejecting Smart Delete when it shouldn't. That was fixed, so Smart Delete will work in even more situations.

We've also made some improvements in the way scheduled copies detect failed copies: in 3.2/3.2.1, some users reported getting notifications that the backup failed when it had actually worked. We're pretty sure we figured out why, and you should always get a notification of success if the backup was successful.

Download Away!

SuperDuper! 3.2.2 is now available as an automatic upgrade, or you can download it here:

SuperDuper! 3.2.2

Enjoy, and thanks -- as always -- for your support.

Optional Reading

Here's a great example of Smart Delete doing its thing. I've edited out the boring... well, more boring parts.

But I'll tell you: for backups, I love boring. Boring means it just worked and didn't make a fuss about it. Boring means we did what was needed and handled stuff for you, safely, without your intervention.

That's better than the alternative. You definitely don't want backups to be exciting!

| 10:49:43 AM | Info | SuperDuper!, 3.2.1 (114), path: /Applications/SuperDuper!.app, Mac OS 10.13.6 build 17G65 (i386)

| 10:49:43 AM | Info | Started on Sat, Sep 29, 2018 at 10:49 AM

| 10:49:43 AM | Info | Source Volume: "Backup" mount: '/' device: disk1s1 file system: 'APFS' protocol: PCI OS: 10.13.6 capacity: 250.79 GB available: 99.43 GB files: 789,433 directories: 186,693

| 10:49:43 AM | Info | Target Volume: "Backup2" mount: '/Volumes/Backup2' device: disk2s2 file system: 'Mac OS Extended (Journaled)' protocol: USB OS: 10.13.6 capacity: 165 GB available: 32.3 MB files: 804,881 directories: 186,208

| 10:49:43 AM | Info | Copy Mode : Smart Update

| 10:49:43 AM | Info | Copy Script : Backup - all files.dset

.

.

.

| 10:57:35 AM | Info | Unable to preallocate target file /Volumes/Backup2/private/var/folders/y3/9l0l08x93k5bpj5stfkmh68w0000gn/T/com.apple.Safari/WebKit/MediaCache/CachedMedia-19AN46 of size 2105344 due to error: 28, No space left on device

| 10:57:35 AM | Info | Attempting to reclaim at least 2.1 MB with Smart Delete.

| 10:57:35 AM | Info | Reclaimed 2.2 MB with Smart Delete. Total available is now 2.7 MB.

| 10:57:40 AM | Info | Unable to preallocate target file /Volumes/Backup2/private/var/folders/y3/9l0l08x93k5bpj5stfkmh68w0000gn/T/com.apple.Safari/WebKit/MediaCache/CachedMedia-GJXeKR of size 1957888 due to error: 28, No space left on device

| 10:57:40 AM | Info | Attempting to reclaim at least 2 MB with Smart Delete.

| 10:57:40 AM | Info | Reclaimed 3.1 MB with Smart Delete. Total available is now 3.7 MB.

| 10:57:44 AM | Info | Unable to preallocate target file /Volumes/Backup2/private/var/folders/y3/9l0l08x93k5bpj5stfkmh68w0000gn/T/com.apple.Safari/WebKit/MediaCache/CachedMedia-yr8G8w of size 1024000 due to error: 28, No space left on device

| 10:57:44 AM | Info | Attempting to reclaim at least 1 MB with Smart Delete.

| 10:57:44 AM | Info | Reclaimed 1.3 MB with Smart Delete. Total available is now 1.5 MB.

| 10:57:48 AM | Info | Unable to preallocate target file /Volumes/Backup2/private/var/folders/y3/9l0l08x93k5bpj5stfkmh68w0000gn/T/com.apple.Safari/WebKit/MediaCache/CachedMedia-Yd2AYS of size 1531904 due to error: 28, No space left on device

| 10:57:48 AM | Info | Attempting to reclaim at least 1.5 MB with Smart Delete.

| 10:57:48 AM | Info | Reclaimed 2.2 MB with Smart Delete. Total available is now 2.4 MB.

| 10:57:51 AM | Info | Unable to preallocate target file /Volumes/Backup2/private/var/folders/y3/9l0l08x93k5bpj5stfkmh68w0000gn/T/com.apple.Safari/WebKit/MediaCache/CachedMedia-lYyQ8C of size 925696 due to error: 28, No space left on device

| 10:57:51 AM | Info | Attempting to reclaim at least 926 KB with Smart Delete.

| 10:57:51 AM | Info | Reclaimed 1.2 MB with Smart Delete. Total available is now 2.1 MB.

| 10:57:55 AM | Info | Unable to preallocate target file /Volumes/Backup2/private/var/folders/y3/9l0l08x93k5bpj5stfkmh68w0000gn/T/com.apple.Safari/WebKit/MediaCache/CachedMedia-02P2cr of size 1515520 due to error: 28, No space left on device

| 10:57:55 AM | Info | Attempting to reclaim at least 1.5 MB with Smart Delete.

| 10:57:55 AM | Info | Reclaimed 2.6 MB with Smart Delete. Total available is now 3.7 MB.

.

.

.

| 10:57:59 AM | Info | Unable to preallocate target file /Volumes/Backup2/private/var/folders/y3/9l0l08x93k5bpj5stfkmh68w0000gn/0/com.apple.dock.launchpad/db/db-wal of size 2954072 due to error: 28, No space left on device

| 10:57:59 AM | Info | Attempting to reclaim at least 3 MB with Smart Delete.

| 10:57:59 AM | Info | Reclaimed 3.6 MB with Smart Delete. Total available is now 6.5 MB.

.

.

.

| 10:58:08 AM | Info | Unable to preallocate target file /Volumes/Backup2/private/var/db/uuidtext/dsc/C80963E3871231D996884D4A99B15090 of size 45483854 due to error: 28, No space left on device

| 10:58:08 AM | Info | Attempting to reclaim at least 45.5 MB with Smart Delete.

| 10:58:08 AM | Info | Reclaimed 73.8 MB with Smart Delete. Total available is now 76.2 MB.

.

.

.

| 11:24:38 AM | Info | Evaluated 1003310 items occupying 144.33 GB (186382 directories, 793683 files, 23245 symlinks)

| 11:24:38 AM | Info | Copied 76790 items totaling 12.91 GB (185217 directories, 76156 files, 634 symlinks)

| 11:24:38 AM | Info | Cloned 140.11 GB of data in 2089 seconds at an effective transfer rate of 67.07 MB/s

.

.

.

| 11:31:42 AM | Info | Copy complete.

Woo! So many potential disk full situations...and success!

Smarty Pants Monday, September 24, 2018

Executive Summary

SuperDuper 3.2 is now available. It includes Smart Delete, which minimizes disk full errors, and support for macOS Mojave.

In the Less Smart Days of Old(e)

Since SuperDuper!'s first release, we've had Smart Update, which speeds up copying by quickly evaluating a drive on the fly, copying and deleting where appropriate. It does this in one pass for speed and efficiency. Works great.

However, there's a small downside to this approach: if your disk is relatively full, and a change would temporarily fill the disk during processing, we'd trigger a disk full error and stop, even though the final result would have fit.

Recovery typically involved doing an "Erase, then copy" backup, which took time and was riskier than we'd like.

Safety First (and second)

There are some subtleties in the way Smart Update is done that can aggravate this situation -- but for a good cause.

While we don't "leave all the deletions to the end", as some have suggested (usually via a peeved support email), we consciously delete files as late as is practical: what we call "post-traversal". So, in a depth-first copy, we clean up as we "pop" back up the directory tree.

In human (as opposed to developer) terms, that means when we're about to leave a folder, we tidy it up, removing anything that shouldn't be there.
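Here's a minimal sketch of that post-traversal order, using an in-memory tree (nested Python dicts) as a hypothetical stand-in for real folders. It isn't SuperDuper!'s code; it just shows copies happening on the way down and each folder's cleanup happening as we leave it. One simplification: a file being replaced by a same-named folder is overwritten right away.

```python
from typing import Dict, List, Union

# Hypothetical in-memory model: a folder is a dict mapping names to either
# bytes (a file's contents) or another dict (a subfolder).
Tree = Dict[str, Union[bytes, "Tree"]]


def smart_update(src: Tree, dst: Tree, log: List[str], path: str = "") -> None:
    """Make dst match src, deferring deletions until we leave each folder."""
    # Copy/update pass: bring over anything new or changed at this level,
    # descending depth-first into subfolders as we meet them.
    for name, item in src.items():
        where = f"{path}/{name}"
        if isinstance(item, dict):
            if not isinstance(dst.get(name), dict):
                dst[name] = {}  # create the folder (or replace a same-named file)
                log.append(f"mkdir {where}")
            smart_update(item, dst[name], log, where)
        elif dst.get(name) != item:
            dst[name] = item
            log.append(f"copy {where}")
    # Post-traversal: we're about to "pop" back up, so tidy this folder by
    # removing anything the source no longer has.
    for name in [n for n in dst if n not in src]:
        del dst[name]
        log.append(f"delete {path}/{name}")
```

Run against a target with a stale file in a subfolder and another at the top, the log shows each folder's new items copied before that folder's stale items are removed.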

Why do we do it this way?

Well, when users make mistakes, we want to give them the best chance of recovery with a data salvaging tool. By copying before deleting at a given level, we don't overwrite the deleted files' data with new data as quickly. So, in an emergency, it's much easier for a data salvaging tool to get the files back.

The downside, though, is a potential for disk full errors when there's not much free space on a drive.

Smart Delete

Enter Smart Delete!

This is something we've been thinking about and working on for a while. The problem has always been balancing safety with convenience. But we've finally come up with an idea (and implementation) that works really well.

Basically, if we hit a disk full error, we "peek" ahead and clean things up before Smart Update gets there, just enough so it can do what it needs to do. Once we have the space, Smart Delete stops and allows the regular Smart Update to do its thing.
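To illustrate, here's a toy simulation, assuming a single flat folder where sizes are plain numbers and a "pending" list holds the deletions Smart Update would otherwise get to later. When a copy won't fit, it removes pending items, in order, only until there's room. The names (run_copy, DiskFull) are hypothetical, and the real feature works on an actual directory tree.

```python
from typing import Dict, List, Tuple


class DiskFull(Exception):
    """Raised when a copy can't fit on the target."""


def run_copy(capacity: int, existing: Dict[str, int],
             to_copy: List[Tuple[str, int]], to_delete: List[str],
             smart_delete: bool = True) -> Dict[str, int]:
    """Copy new items, deleting stale ones late unless space runs out."""
    disk = dict(existing)      # name -> size currently on the target
    pending = list(to_delete)  # deletions Smart Update would do later
    for name, size in to_copy:
        free = capacity - sum(disk.values())
        if size > free and smart_delete:
            # Peek ahead: remove just enough pending items to make room.
            while size > free and pending:
                free += disk.pop(pending.pop(0), 0)
        if size > free:
            raise DiskFull(f"no room for {name} ({size} > {free})")
        disk[name] = size
    # Whatever Smart Delete didn't need to remove early is deleted as usual.
    for name in pending:
        disk.pop(name, None)
    return disk
```

For example, on a 100-unit disk holding a 60-unit "Old Photos" folder and 20 units of other data, copying the renamed 60-unit "New Photos" folder raises DiskFull without Smart Delete, but succeeds with it, ending at 80 units used.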

Smart Update and Smart Delete work hand-in-hand to minimize disk full errors while preserving safety, with no significant speed penalty.

Everyone Wins!

So there you go: another completely "invisible" feature that improves SuperDuper! in significant ways that you don't have to think about...or even notice. You'll just see (or, rather, not see) fewer failures in more "extreme" copies.

This is especially useful for photographers and others who typically deal with large data files, and who rename or move huge folders of content. Where those changes might have filled a drive before, the copy will now succeed.

Mojave Managed

We're also supporting Mojave in 3.2 with one small caveat: for the moment, we've opted out of Dark Mode. We just didn't have enough time to finish our Dark Mode implementation, didn't like what we had, and rather than delay things, decided to keep it in the lab for more testing and refinement. It'll be in a future update.

More Surprises in Store

We've got more things planned for the future, of course, so thanks for using SuperDuper! -- we really appreciate each and every one of you.

Enjoy the new version, and let us know if you have any questions!

Download SuperDuper! 3.2

3.2 B3: The Revenge! Wednesday, September 12, 2018

(OK, yeah, I should have used "The Revenge" for B4. Stop being such a stickler.)

Announcing SuperDuper 3.2 B3: a cavalcade of unnoticeable changes!

The march towards Mojave continues, and with the SAE (September Apple Event) happening today, I figured we'd release a beta with a bunch of polish that you may or may not notice.

But First...Something Technical!

As I've mentioned in previous posts, we've rewritten our scheduling, moving away from AppleScript to Swift, to avoid the various security prompts that were added to Mojave when doing pretty basic things.

Initially, I followed the basic structure of what I'd done before, effectively implementing a fully functional "proof of concept" to make sure it was going to do what it needed to do, without any downside.

In this Beta, I've moved past the original logic, and have taken advantage of capabilities that weren't possible, or weren't efficient, in AppleScript.

For example: the previously mentioned com.shirtpocket.lastVolumeList.plist was a file that kept track of the list of volumes mounted on the system, generated by sdbackuponmount at login. When a new mount occurred, or when the /Volumes folder changed, launchd would run sdbackuponmount again. It'd get a list of current volumes, compare that to the list of previous volumes, run the appropriate schedules for any new volumes, update com.shirtpocket.lastVolumeList.plist and quit.
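For the curious, that old per-run logic looked roughly like this minimal Python sketch. The plist layout (a plain array of volume names), the login-time handling and the check_volumes name are assumptions; only the state file's role comes from the description above.

```python
import plistlib
from pathlib import Path
from typing import Callable, Iterable, Set


def check_volumes(state_file: Path, current: Iterable[str],
                  run_schedules_for: Callable[[str], None]) -> Set[str]:
    """One launchd-triggered pass of the old state-file approach."""
    now_mounted = set(current)
    if state_file.exists():
        previous = set(plistlib.loads(state_file.read_bytes()))
        new_volumes = now_mounted - previous
    else:
        new_volumes = set()  # login run: just record what's already there
    for volume in sorted(new_volumes):
        run_schedules_for(volume)
    # Save the current list so the next run only reacts to newcomers.
    state_file.write_bytes(plistlib.dumps(sorted(now_mounted)))
    return new_volumes
```

Every trigger re-reads the file, diffs, acts and rewrites it, which is exactly the state-keeping the notification approach later made unnecessary.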

This all made sense in AppleScript: the only way to find out about new volumes was to poll, and polling is terrible, so we used launchd to do it intelligently, and kept state in a file. I kept the approach in the rewritten version at first.

But...Why?

When I reworked things to properly handle ThrottleInterval, I initially took this same approach and kept checking for new volumes for 10 seconds, with a sleep in between. I wrote up the blog post to document ThrottleInterval for other developers, and posted it.

That was OK, and worked fine, but also bugged me. Polling is bad. Even slow polling is bad.

So I spent a while reworking things to block on a semaphore, with mount notifications releasing it so the disk list would get checked, and adding more stuff to deal with the increasingly complex control flow...

...and then, looking at what I had done, I realized I was being a complete and utter fool.

Not by trying to avoid polling. But by not doing this the "right way". The solution was staring me right in the face.

Block-head

Thing is, volume notifications are built into Workspace, and always have been. Those couldn't be used in AppleScript, but they're right there for use in Objective-C or Swift.

So all I had to do was subscribe to those notifications, block waiting for them to happen, and when one came in, react to it. No need to quit, since it's no longer polling at all. And no state file, because it's no longer needed: the notification itself says what volume was mounted.
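Here's the shape of that in a minimal Python sketch. On macOS the events would come from NSWorkspace's didMountNotification; since that isn't available in plain Python, a queue.Queue stands in for it, and the None sentinel exists only so the sketch can stop. The point is the structure: one blocking wait, no sleep loop, no state file.

```python
import queue
import threading
from typing import Callable, Optional


def watch_mounts(events: "queue.Queue[Optional[str]]",
                 on_mount: Callable[[str], None]) -> None:
    """Block until a mount event arrives, then react to it."""
    while True:
        volume = events.get()  # blocks without using CPU until something arrives
        if volume is None:     # sentinel so this sketch can shut down cleanly
            return
        on_mount(volume)       # the event itself names the new volume


if __name__ == "__main__":
    events: "queue.Queue[Optional[str]]" = queue.Queue()
    seen = []
    watcher = threading.Thread(target=watch_mounts, args=(events, seen.append))
    watcher.start()
    events.put("/Volumes/Backup")  # stand-in for a mount notification
    events.put(None)
    watcher.join()
    print(seen)  # ['/Volumes/Backup']
```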

It's been said many times: if you're writing a lot of code to accomplish something simple, you're not using the Frameworks properly.

Indeed.

There really is nothing much more satisfying than taking code that's become overly complicated and deleting most of it. The new approach is simpler, cleaner, faster and more reliable. All good things.

Download

That change is in there, along with a bunch more. You probably won't notice any big differences, but they're there and they make things better.

Download SuperDuper! 3.2 B3

Warning: this is a technical post, put here in the hopes that it'll help someone else someday.

We've had a problem over the years that our Backup on Connect LaunchAgent produces a ton of logging after a drive is attached and a copy is running. The logging looks something like:

9/2/18 8:00:11.182 AM com.apple.xpc.launchd[1]: (sdbackuponmount) Service only ran for 0 seconds. Pushing respawn out by 60 seconds.

Back when we originally noticed the problem, over 5 years ago, we "fixed" it by adjusting ThrottleInterval to 0 (found experimentally at the time). It had no negative effects, but the problem came back later and I never could understand why...certainly, it didn't make sense based on the man page, which says:

ThrottleInterval <integer>

This key lets one override the default throttling policy imposed on jobs by launchd. The value is in seconds, and by default, jobs will not be spawned more than once every 10 seconds. The principle behind this is that jobs should linger around just in case they are needed again in the near future. This not only reduces the latency of responses, but it encourages developers to amortize the cost of program invocation.
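For reference, ThrottleInterval lives in the agent's launchd property list alongside its triggers. Here's a minimal sketch that generates such a plist with Python's plistlib. The label and program path are hypothetical, and using StartOnMount plus WatchPaths on /Volumes is an assumption chosen to match the mount and folder-change triggers described on this blog, not a copy of SuperDuper!'s actual agent.

```python
import plistlib

# Hypothetical LaunchAgent definition; the label and program path are made up.
agent = {
    "Label": "com.example.backuponmount",
    "ProgramArguments": ["/usr/local/bin/backuponmount"],
    "StartOnMount": True,        # start whenever a file system is mounted
    "WatchPaths": ["/Volumes"],  # ...or when the /Volumes folder changes
    "ThrottleInterval": 10,      # seconds; 10 is the documented default
}

plist_xml = plistlib.dumps(agent)  # XML property list, as bytes
print(plist_xml.decode("utf-8"))
```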

So. That implies that the jobs won't be spawned more often than every n seconds. OK, not a problem! Our agent processes mount changes quickly, launches the backups if needed and quits. That seemed sensible--get in, do your thing quickly, and get out. We didn't need to be respawned right away, since each run processed all of the mounts and unmounts that might have happened during a 10-second "throttled" interval.

It should have been fine... but wasn't.

The only thing I could come up with was that there must be a weird bug in WatchPaths where under some conditions, it would trigger on writes to child folders, even though it was documented not to. I couldn't figure out how to get around it, so we just put up with the logging.

But that wasn't the problem. The problem is what the man page isn't saying, but is implied in the last part: "jobs should linger around just in case they are needed again" is the key.

Basically, the job must run for at least as long as the ThrottleInterval is set to (default = 10 seconds). If it doesn't run for that long, it respawns the job, adjusted by a certain amount of time, even when the condition isn't triggered again.

So, in our case, we'd do our thing quickly and quit. But we didn't run for the minimum amount of time, and that caused the logging. launchd would then respawn us. We wouldn't have anything to do, so we'd quit quickly again, repeating the cycle.

Setting ThrottleInterval to 0 worked, when that was allowed, because we'd run for more than 0 seconds, so we wouldn't respawn. But when they started disallowing it ("you're not that important")...boom.

Once I figured out what the deal was, it was an easy enough fix. The new agent runs for the full, default, 10-second ThrottleInterval. Rather than quitting immediately after processing the mounts, it sleeps for a second and processes them again. It continues doing this until it's been running for 10 seconds, then quits.
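In sketch form (Python, with hypothetical names), that loop looks something like this. The clock and sleep functions are injectable only so the timing can be checked without actually waiting ten seconds; by default they're the real ones.

```python
import time
from typing import Callable


def run_for_throttle_interval(process_mounts: Callable[[], None],
                              interval: float = 10.0,
                              pause: float = 1.0,
                              clock: Callable[[], float] = time.monotonic,
                              sleep: Callable[[float], None] = time.sleep) -> int:
    """Keep re-processing mounts until the job has lived for `interval` seconds."""
    start = clock()
    passes = 0
    while True:
        process_mounts()
        passes += 1
        if clock() - start >= interval:
            # We've now run at least as long as ThrottleInterval, so (per the
            # behavior described above) launchd has no reason to respawn us.
            return passes
        sleep(pause)
```

With the defaults and a process_mounts that returns instantly, that's 11 passes: one at start-up and one after each of ten one-second pauses.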

With that change, the logging has stopped, and a long mystery has been solved.

This'll be in the next beta. Yay!

Technical Update! Thursday, September 06, 2018

SuperDuper! 3.2 B1 was well received. We literally had no bugs reported against it, which was pretty gratifying.

So, let's repeat that with SuperDuper! 3.2 B2! (There's a download link at the bottom of this post.)

Remember - SuperDuper! 3.2 runs with macOS 10.10 and later, and has improvements for every user, not just those using Mojave.

Here are some technical things that you might not immediately notice:

If you're running SuperDuper! under Mojave, you need to add it to Full Disk Access. SuperDuper! will prompt you and refuse to run until this permission has been granted.

Due to the nature of Full Disk Access, it has to be enabled before SuperDuper is launched--that's why we don't wait for you to add it and automatically proceed.

As I explained in the last post, we've completely rewritten our scheduling so it's no longer in AppleScript. We've split that into a number of parts, one of which can be used by you from AppleScript, Automator, shell script--whatever--to automatically perform a copy using saved SuperDuper settings.

In case you didn't realize it: copy settings, which include the source and destination drives, the copy script and all the options, plus the log from when it was run, can be saved using the File menu, and you can put them anywhere you'd like.

The command line tool that runs settings is called sdautomatedcopycontroller (so catchy!) and is in our bundle. For convenience, there's a symlink to it available in ~/Library/Application Support/SuperDuper!/Scheduled Copies, and we automatically update that symlink if you move SuperDuper.

The command takes one or more settings files as parameters (either as Unix paths or file:// URLs), and handles all the details needed to run SuperDuper! automatically. If there's a copy in progress, it waits until SuperDuper! is available. Any number of these can be active, so you could run 20 of them in the background, or supply 20 files on a single command line: it's up to you. sdautomatedcopycontroller manages the details of interacting with SuperDuper for you.
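If you'd like to drive it from a script, a minimal Python sketch might look like this. The symlink name inside Scheduled Copies is assumed to match the tool's name, and the settings file paths used below are made up; check your own folder before relying on it. The sketch doesn't interpret the exit status, since its meaning isn't documented here.

```python
import subprocess
from pathlib import Path
from typing import List, Sequence

# Assumed symlink location and name, based on the folder described above.
CONTROLLER = (Path.home() / "Library" / "Application Support" / "SuperDuper!"
              / "Scheduled Copies" / "sdautomatedcopycontroller")


def build_command(settings_files: Sequence[str]) -> List[str]:
    """Argument list for running one or more saved settings files."""
    if not settings_files:
        raise ValueError("need at least one settings file")
    # Plain paths and file:// URLs are both accepted, so pass them through as-is.
    return [str(CONTROLLER), *settings_files]


def run_settings(settings_files: Sequence[str]) -> int:
    """Run the copies (waiting if SuperDuper! is busy) and return the exit status."""
    return subprocess.run(build_command(settings_files)).returncode
```

For example, build_command(["/Users/me/Backups/Nightly", "file:///Users/me/Backups/Weekly"]) yields the tool's path followed by both arguments, unchanged.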

We've also created a small Finder extension that lets you select one or more settings files and run them--select "Run SuperDuper! settings" in the Services menu. The location and name of this particular command may change in future betas. (FYI, it's a very simple Automator action and uses the aforementioned sdautomatedcopycontroller.)

We now automatically mount the source and destination volumes during automated copies. Previously, we only mounted the destination. The details are managed by sdautomatedcopycontroller, so the behavior will work for your own runs as well.

Any volumes that were automatically mounted are automatically scheduled for unmount at the end of a successful copy. The unmounts are performed when SuperDuper quits (unless the unmount is vetoed by other applications such as Spotlight or Antivirus).
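The bookkeeping behind that can be sketched like this (Python, hypothetical function and callback names): remember which volumes we mounted, schedule only those for unmount, and only after a successful copy. Volumes that were already mounted are left alone.

```python
from typing import Callable, Iterable, List


def run_automated_copy(volumes: Iterable[str],
                       is_mounted: Callable[[str], bool],
                       mount: Callable[[str], None],
                       copy: Callable[[], bool]) -> List[str]:
    """Mount missing volumes, copy, and return what to unmount at quit."""
    mounted_by_us: List[str] = []
    for volume in volumes:
        if not is_mounted(volume):
            mount(volume)
            mounted_by_us.append(volume)
    # Only a successful copy schedules unmounts, and only for volumes we
    # mounted ourselves; anything the user had mounted stays put.
    return mounted_by_us if copy() else []


def unmount_at_quit(scheduled: Iterable[str],
                    unmount: Callable[[str], bool]) -> List[str]:
    """Attempt each scheduled unmount; return the ones that were vetoed."""
    # unmount() returning False models another app (Spotlight, antivirus)
    # refusing to let the volume go.
    return [volume for volume in scheduled if not unmount(volume)]
```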

- There is no #5.

sdautomatedcopycontroller also automatically unlocks source or destination volumes if you have the volume password in the keychain.

If you have a locked APFS volume and you've scheduled it (or have otherwise set up an automated copy), you'll get two security prompts the first time through. The first authorizes sdautomatedcopycontroller to access your keychain. The second allows it to access the password for the volume.

To allow things to run automatically, click "Always allow" for both prompts. As you'd expect, once you've authorized for the keychain, other locked volumes will only prompt to access the volume password.

We've added Notification Center support for scheduled copies. If Growl is not present and running, we fall back to Notification Center. Our existing, long-term Growl support remains intact.

If you have need of more complicated notifications, we still suggest using Growl, since, in addition to supporting "forwarding" to the notification center, it can also be configured to send email and other handy things.

Plus, supporting other developers is cool. Growl is in the App Store and still works great. We support 3rd party developers and think you should kick them some dough, too! All of us work hard to make your life better.

Minor issue, but macOS used to clean up "local temporary files" (which were deleted on logout) by moving the file to the Trash. We used a local temporary file for Backup on Connect, and would get occasional questions from users asking why they would find a file we were using for that feature in the trash.

Well, no more. The file has been sent to the land of wind and ghosts.

That'll do for now: enjoy!

Download SuperDuper! 3.2 B2